The F42 AI Brief #052: AI Signals You Can’t Afford to Miss

Signals for builders: trust, workflow, data advantage.

Here’s your Monday dose of The AI Brief.

Your weekly dose of AI breakthroughs, startup playbooks, tool hacks and strategic nudges—empowering founders to lead in an AI world.

📈 Trending Now

The week’s unmissable AI headlines.

💡 Innovator Spotlight

Meet the change-makers.

🛠️ Tool of the Week

Your speed-boost in a nutshell.

📌 Note to Self

Words above my desk.

📈 Trending Now

⚖️ OpenAI Subpoenas Non-Profits – Legal Intimidation in the AI Sector.

→ OpenAI has issued sweeping subpoenas to at least seven non-profit organisations that publicly criticised its conversion from non-profit to for-profit, demanding donor names, internal communications and links to Elon Musk and Mark Zuckerberg.

→ The move has triggered global scrutiny: voices inside OpenAI (e.g., a public post by its head of mission alignment) and outside legal experts frame it as chilling dissent, and it raises questions about corporate ethics and governance.

→ Founders: If your “mission” is to benefit all of humanity but your boardroom responds to dissent with subpoenas, you’ve lost the narrative. Transparency and aligned incentives are your brand’s real guard-rails.

READ MORE »

The Altman Doctrine: How Billions Make Principles Negotiable

This article is based on public statements, court filings, regulatory documents, testimony from former OpenAI board members, and reporting from Reuters, Fortune, Bloomberg, Vice, and other credible outlets. All potentially defamatory claims are attributed to their sources.

🏙 Google Commits $15 B to AI Hub in India – Infrastructure Shift Gets Serious

→ Google LLC announced a $15 billion investment over the next five years to build its first major AI hub in Visakhapatnam, India: a gigawatt-scale data centre campus, subsea/fibre gateway, clean energy build-out and full AI stack deployment. (Sources: Reuters, blog.google)

→ This is Google’s largest ever investment outside the U.S., signalling that infrastructure for AI is globalising fast — compute, talent and data localisation are no longer optional for global scale.

→ Founders: If you’re targeting APAC/MENA, factor latency, ecosystem incentives and sovereign-stack requirements into your product and go-to-market strategy. Being “global” means going deeper than translation.

🎤 Taylor Swift Promo Sparks #SwiftiesAgainstAI Backlash

→ Music megastar Taylor Swift is facing a fan-revolt after promotional videos in her new album campaign appeared to contain tell-tale AI artefacts — distorted objects, unnatural shadowing, mismatched fingers — triggering the hashtag #SwiftiesAgainstAI.

→ The backlash resonates far beyond entertainment: it underscores how brand authenticity and creator trust are being undermined when AI-generated content is undisclosed to even the creator’s own audience.

→ Founders: If your product or brand uses AI-generated or assisted content, transparency isn’t optional — disclosure, explanation and human-in-loop matter more than ever for legitimacy.

💼 Meta’s $1.5 B Talent Grab – War for AI Researchers Intensifies

→ Meta Platforms is investing heavily in elite AI research and engineering talent, reportedly offering one star researcher a package of up to $1.5 billion over six years. The move signals that talent is a front line in the AI arms race.

→ Compute and data remain essential, but the message is clear: human capital is becoming the differentiator in the next wave of generative and agentic AI systems.

→ Founders: You cannot win just by access to models or GPUs — your competitive edge may hinge on the people, the institutional culture and the unique domain knowledge you build around them.

🧠 Anthropic and U.S. Regulatory Fracture – A Divided AI Policy Landscape

→ Anthropic is pushing back on the U.S. federal government’s deregulatory “AI-fast-lane” agenda, contrasting it with state-level transparency laws and model safety commitments — marking a split between innovators and regulators.

→ The divergence signals that AI governance isn’t converging; rather, it is fragmenting by jurisdiction, business model and strategic alignment — startups and funders must design for regulatory complexity, not simplicity.

→ Founders: Craft your regulatory roadmap like your tech stack: weighted for flexibility, multiple jurisdictions and scenario readiness. One size will not fit all.

🧪 Open-Source Model Surge – The Next Wave?

→ The push for open models (for example, Reflection AI raising large funding) shows open-source is no longer niche but a full-scale strategic path in the AI race.

→ While this is more “signal” than “story” this week (and falls into funding territory, which we normally exclude), the movement still carries outsized implications for ecosystem design, transparency and moat creation.

→ Founders: If you’re building on or around open models you’ll want to lean into community, licensing clarity, support-network and hybrid business models now — the terrain is heating up.

🛡️ Safety Story: Australia Warns AI Chatbots “Hurting Children”

→ Australia’s Education Minister warned that AI chatbots are increasingly being used to bully children, encourage self-harm and spread harmful content, prompting a national anti-bullying initiative.

→ The incident underscores that safety and real-world harm are no longer theoretical — misuse of generative systems is moving into mainstream policy, not just tech labs.

→ Founders: If you touch youth markets or high-risk verticals (health, learning, social), you need to build in robust usage monitoring, incident-response workflows and safety-by-design. The policy world is catching up.

💡 Innovator Spotlight

👉 Modular “Skills” for Claude – Custom AI Modules from Anthropic

👉 Who they are:

– Anthropic, an AI company focused on next-gen models and agentic workflows.

👉 What’s unique:

– They’ve released a public repository named “Skills”: folders of instructions, scripts and resources that Claude can load dynamically for specific tasks such as document formatting, brand compliance, or spreadsheet automation. (Sources: VentureBeat, GitHub, Anthropic)

– These modules are composable, portable (usable across Claude apps, Code and API) and designed to give agents organisational context rather than generic capability. That means you can teach Claude your workflow once and reuse it. (Source: Anthropic)

👉 Pinch-this lesson:

– Build your AI product not just as “model + data” but “model + domain skillset” that mirrors your workflow—turn your knowledge assets into reusable modules no one else has.

👉 Source:

https://github.com/anthropics/skills
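
For the builders: here is a minimal Python sketch of what scaffolding one such skill folder could look like. It assumes the layout used in the public repository, where each skill is a folder holding a SKILL.md file that opens with YAML frontmatter (name and description) followed by plain-language instructions. The function name scaffold_skill, the skill name and the instruction text are all hypothetical, so check the repository README for the current format before relying on it.

```python
import tempfile
from pathlib import Path


def scaffold_skill(root: Path, name: str, description: str, instructions: str) -> Path:
    """Create <root>/<name>/SKILL.md: YAML frontmatter, then the instructions.

    Assumption: a skill is a folder whose SKILL.md starts with `name` and
    `description` frontmatter. Verify against the repository README.
    """
    skill_dir = root / name
    skill_dir.mkdir(parents=True, exist_ok=True)
    skill_md = skill_dir / "SKILL.md"
    skill_md.write_text(
        "---\n"
        f"name: {name}\n"
        f"description: {description}\n"
        "---\n\n"
        f"{instructions.strip()}\n",
        encoding="utf-8",
    )
    return skill_md


if __name__ == "__main__":
    # Hypothetical example: an onboarding workflow captured as a skill.
    with tempfile.TemporaryDirectory() as tmp:
        path = scaffold_skill(
            Path(tmp),
            name="onboarding-checklist",
            description="Walks a new customer through account setup.",
            instructions="1. Confirm the plan.\n2. Invite the team.\n3. Book the kickoff call.",
        )
        print(path.read_text(encoding="utf-8"))
```

Keeping each skill as a plain folder means it can live in version control next to the rest of your playbooks, which is where the "teach once, reuse" benefit comes from.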

🛠️ Tool of the Week

1. Anthropic Skills

What it does: Modular instruction folders that teach Claude specific workflows—document creation, data analysis, web scraping—with full version control and transparency.

Why founders should care: Turn repetitive research tasks into reproducible skills anyone on your team can execute identically, cutting training time to zero.

Quick start tip: Fork the PDF extraction skill from GitHub, test it on your last three customer contracts, then build a custom skill for your onboarding workflow.

2. Benchling Automation Designer

What it does: Drag-and-drop interface for building data pipelines that auto-trigger on file uploads, parse results, and run Python/R analysis without leaving the platform.

Why founders should care: “Zero-click” experiment workflows mean your team runs more tests faster—work that took days now completes overnight.

Quick start tip: Sign up for Benchling’s free tier, connect one data source via API, then build your first automated pipeline using their visual designer.

3. FutureHouse PaperQA

What it does: AI agent searches millions of research papers, synthesises findings with citations, and answers questions like “has anyone tested this hypothesis before.”

Why founders should care: Competitive research and prior art searches that used to take weeks now finish in minutes with properly cited sources.

Quick start tip: Visit the platform, paste your core product hypothesis, and see if PaperQA finds research validating or contradicting your assumptions.

4. Julius AI Data Analyst

What it does: Chat interface that analyzes spreadsheets, generates visualizations, and runs statistical tests on any data format without requiring coding skills.

Why founders should care: Your entire team can query product/customer data conversationally—democratizing data analysis beyond your technical hires.

Quick start tip: Upload last month’s product metrics CSV, ask “what’s causing our churn spike,” and let Julius run correlation analysis automatically.

5. Microsoft Copilot Studio Wave 2

What it does: No-code platform for building multi-agent AI systems that collaborate across Microsoft 365—automating research, scheduling, and reporting workflows.

Why founders should care: Build custom AI agents that execute your exact research protocols without hiring developers or data scientists.

Quick start tip: Use the free trial to create one agent that automatically compiles weekly competitive intelligence from specified sources into a SharePoint report.

6. Consensus Evidence Search

What it does: AI searches peer-reviewed papers to answer research questions with aggregated scientific evidence showing field-wide agreement levels.

Why founders should care: Validate product claims, understand market trends, or research competitive approaches backed by actual academic consensus, not marketing fluff.

Quick start tip: Ask “does [your core feature] improve [target outcome]” and use the consensus meter to strengthen your pitch deck with evidence.

7. PowerDrill AI

What it does: Upload files and interact with data through conversational interface—ask questions, get visualizations, run analyses without writing code.

Why founders should care: Non-technical team members analyze customer feedback, survey results, or usage data instantly without waiting for engineering support.

Quick start tip: Drop your last user survey into PowerDrill, ask “what are the top 3 pain points by segment,” and export the chart for your roadmap meeting.

8. Iris.ai Research Assistant

What it does: Combines keyword extraction, neural topic modeling, and semantic search to help R&D teams discover opportunities in published research.

Why founders should care: Double your team’s research productivity by automatically surfacing relevant academic papers and patents your competitors might have missed.

Quick start tip: Input your product category and core technology, let Iris map the research landscape, then explore gaps where no one’s published yet.

9. Avidnote Research Platform

What it does: Private AI ecosystem integrating literature review, deep analysis, interconnected notes, and writing—all encrypted and never used for training.

Why founders should care: Maintain IP security while using AI for competitive research, patent analysis, or market studies without risking data leaks.

Quick start tip: Start a free account, import five competitor whitepapers, and use AI analysis to extract their technical positioning without exposing your strategy.

10. Coupler.io Data Sync

What it does: Automates data syncing from 70+ apps into Sheets, Excel, or BigQuery with 15-minute update intervals and built-in transformation.

Why founders should care: Unify product, marketing, and sales data into one automated dashboard—no engineering time required for ETL pipelines.

Quick start tip: Connect Stripe + Google Analytics + HubSpot to one Google Sheet, schedule hourly syncs, then build your growth dashboard in under 30 minutes.

📌 Note to Self

F42 GLOBAL SUPER ACCELERATOR

GSA II · Fusion42 — The Truths This Week and all the replays - Plus what is happening next week.

18 October 2025 at 9:30 · GSA 2

What a great week that was. A big thank you to everyone who showed up, to our great speakers, and for all the great comments we received. We hope you got real value from it.

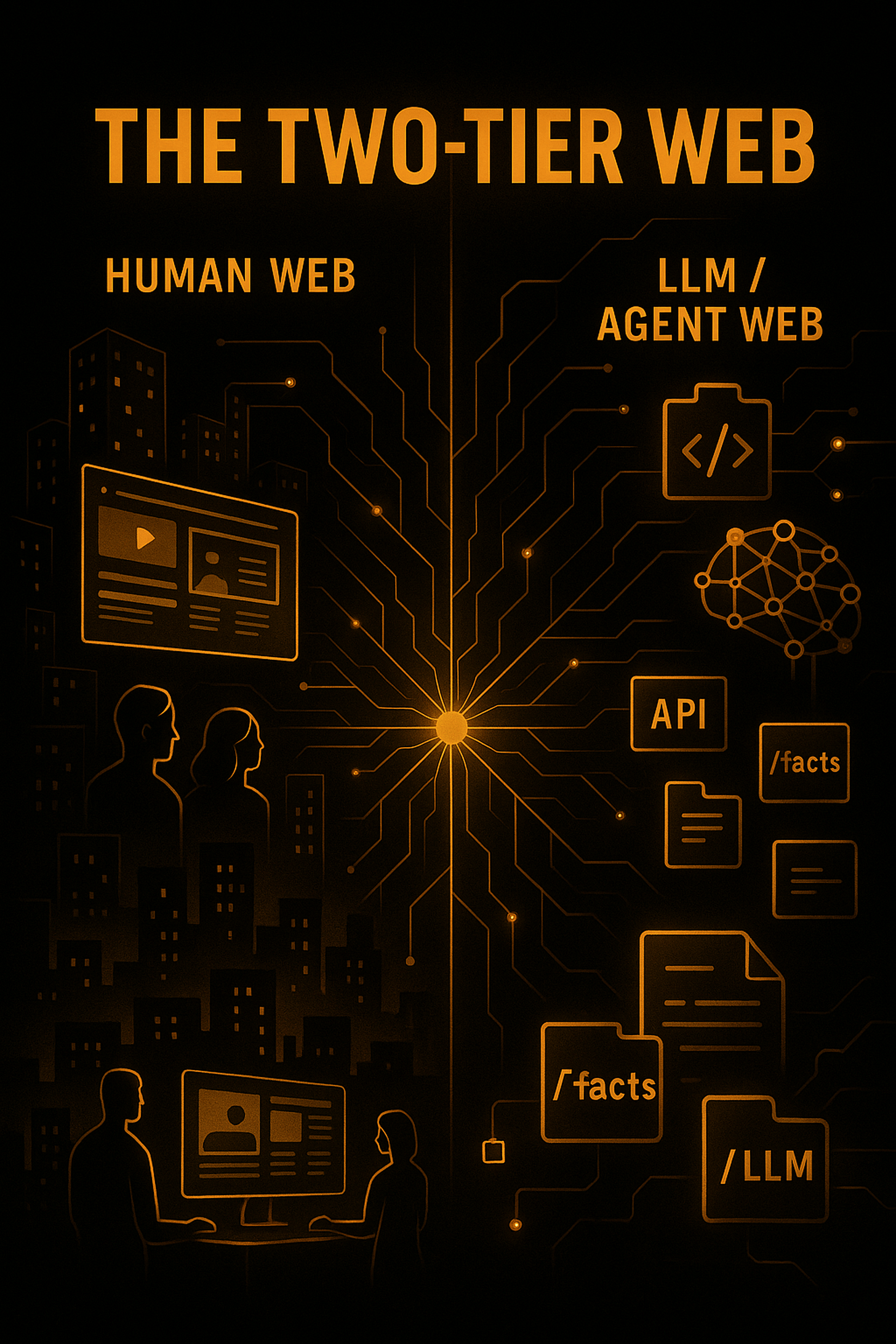

⚙️ The Two-tier web

The web is splitting into two layers: human-facing stories that persuade and convert, and machine-facing facts that LLMs and agents retrieve, parse, and act on. To stay discoverable (and quotable) in AI answers, you must keep pages persuasive for people while making your facts clean, stable, and machine-readable.

Key takeaways:

Publish llms.txt and a public facts page (pricing, limits, security, contacts, “Last updated”).

Add Schema.org JSON-LD (Org, Product, FAQ), keep URLs stable, and update the sitemap <lastmod>.

Track AI referrals with ?utm_source=llm and a GA4 AI/LLM channel; expose OpenAPI/GraphQL so agents can act.

Quick test: paste your facts into ChatGPT or Perplexity → click → check GA4 Realtime.
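
If you want to try the machine-facing layer by hand, the sketch below shows one possible way to generate the Schema.org JSON-LD and to tag a link with utm_source=llm. It uses only the Python standard library. The company name, URLs, email address and FAQ text are placeholders, and the helper names (organization_jsonld, faq_jsonld, tag_for_llm, script_tag) are made up for illustration rather than taken from any tool mentioned here.

```python
import json
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def organization_jsonld(name: str, url: str, contact_email: str) -> dict:
    """Schema.org Organization with a single contact point."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "contactPoint": {
            "@type": "ContactPoint",
            "email": contact_email,
            "contactType": "customer support",
        },
    }


def faq_jsonld(pairs: list) -> dict:
    """Schema.org FAQPage built from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def script_tag(data: dict) -> str:
    """Wrap JSON-LD in the <script> tag that goes into the page head."""
    # Escape "</" so text inside the JSON cannot close the script tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


def tag_for_llm(url: str, source: str = "llm") -> str:
    """Set utm_source on a URL, keeping any other query parameters.

    Note: repeated query keys are collapsed to their last value.
    """
    parts = urlparse(url)
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query["utm_source"] = source
    return urlunparse(parts._replace(query=urlencode(query)))


if __name__ == "__main__":
    # Placeholder values only; swap in your own facts page details.
    org = organization_jsonld("Example Co", "https://example.com", "hello@example.com")
    faq = faq_jsonld([("What does the free tier include?", "Placeholder answer.")])
    print(script_tag(org))
    print(script_tag(faq))
    print(tag_for_llm("https://example.com/facts?ref=newsletter"))
```

Paste the tagged link into your facts page or llms.txt, then run the quick test from the takeaways to see whether the visit shows up under your AI/LLM channel.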

Read the full actionable article here

⚙️ GTM Systems & Loops | The ICP Myth — Levi McPherson

ICP isn’t a persona; it’s a living pattern of pain + desired outcome. With AI commoditising the “how,” advantage shifts to 10× outcomes, ruthless scoring, cross-model validation, and faster feedback loops. Start broad with LLM-assisted segmentation, then re-validate in the real world.

Convert ICP to 3–5 measurable pains and outcomes; hunt adjacencies, then interview/pilot to confirm.

Set 10×/100× expectations; enforce a scoring stack (stack-rank → probability 0–1 → ship/kill).

Run big calls across multiple models to surface bias; use negative prompts to break plans, then re-score.

Story retell test: if prospects can’t repeat your one-paragraph story at ≥80% fidelity, rewrite it.
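
One way to picture the scoring stack (stack-rank → probability 0–1 → ship/kill) is the small Python sketch below. It is an illustration, not Levi's actual method: the Bet fields, the 0.6 ship threshold and the example bets are all assumptions chosen to make the flow concrete.

```python
from dataclasses import dataclass


@dataclass
class Bet:
    name: str
    rank_score: float   # used only for stack-ranking; higher ranks first
    probability: float  # estimated chance of success, from 0 to 1


def decide(bets: list, ship_threshold: float = 0.6) -> list:
    """Stack-rank the bets, then label each one "ship" or "kill".

    ship_threshold is an assumed cut-off; pick your own.
    """
    for bet in bets:
        if not 0.0 <= bet.probability <= 1.0:
            raise ValueError(f"{bet.name}: probability must be between 0 and 1")
    ranked = sorted(bets, key=lambda bet: bet.rank_score, reverse=True)
    return [
        (bet.name, "ship" if bet.probability >= ship_threshold else "kill")
        for bet in ranked
    ]


if __name__ == "__main__":
    # Hypothetical bets for illustration.
    backlog = [
        Bet("self-serve onboarding", rank_score=8, probability=0.7),
        Bet("enterprise SSO", rank_score=9, probability=0.4),
        Bet("referral loop", rank_score=5, probability=0.65),
    ]
    for name, verdict in decide(backlog):
        print(f"{verdict:>4}  {name}")
```

Separating the rank from the probability keeps "how much we want it" apart from "how likely it is to work", which is the point of re-scoring after a negative-prompt pass.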

🔁 If you missed it, the replays here:

🧭 GTM Systems & Loops | Customer-Journey Archaeology — Levi McPherson

Build “inevitability ladders” by scoring the journey against four human drivers—Security, Belonging, Growth, Meaning (S/B/G/M). Map the checkpoints (Pain that matters → Tellable story → Authority to tell it → Why now), detect drift when meaning/novelty fade, and keep value compounding.

Score each pain on S/B/G/M (1–10); hit 3 of 4 at real strength or you’re fragile.

Instrument drift detection (falling delight, novelty decay, weaker retell); run ship → score → iterate loops.

Do a monthly audit: if the value delta isn’t growing, refresh meaning—or risk decline.

Treat GTM as daily practice: every feature, call, and asset feeds the loop.
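
The S/B/G/M check and the monthly audit can be sketched in a few lines of Python. The session gives the 1–10 scale and the "3 of 4" rule; the threshold of 7 for "real strength", the function names and the way the monthly value delta is compared are assumptions made here for illustration.

```python
DRIVERS = ("security", "belonging", "growth", "meaning")


def is_fragile(scores: dict, strong_at: int = 7, needed: int = 3) -> bool:
    """True if fewer than `needed` of the four drivers score at real strength.

    strong_at=7 is an assumed bar for "real strength" on the 1-10 scale.
    """
    for driver in DRIVERS:
        value = scores[driver]
        if not 1 <= value <= 10:
            raise ValueError(f"{driver}: score must be between 1 and 10")
    strong = sum(1 for driver in DRIVERS if scores[driver] >= strong_at)
    return strong < needed


def needs_meaning_refresh(monthly_value_delta: list) -> bool:
    """Monthly audit: True if the latest value delta did not grow."""
    if len(monthly_value_delta) < 2:
        raise ValueError("need at least two months to compare")
    return monthly_value_delta[-1] <= monthly_value_delta[-2]


if __name__ == "__main__":
    # Hypothetical scores for one customer pain.
    pain = {"security": 8, "belonging": 4, "growth": 9, "meaning": 7}
    print("fragile:", is_fragile(pain))
    print("refresh meaning:", needs_meaning_refresh([1.0, 1.4, 1.3]))
```

Run it per pain, not per product: a pain that clears only two drivers is a candidate for the drift watchlist even if the overall journey looks healthy.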

🔁 If you missed it, the replays here:

Here’s what we have lined up for next week (week of 20 Oct):

Tue 21 Oct — Fundraising Reality & Readiness → https://lu.ma/ayq25xmq

Thu 23 Oct — Fundraising Reality & Readiness | Pitch Kung Fu → https://lu.ma/cdk76ai8

Fri Huddles (F42+ only): EMEA/Asia → https://lu.ma/91m4x9yn • Americas → https://lu.ma/rga38ohb

Startup Founder’s Handbook: Mastering the Cap Table (PDF)

WHAT YOU’LL SHIP (outcomes, not theatre)

AI-native stack live: tools wired, workflows automated

GTM in motion: ICP, offer, multi-channel outreach running

Investor-ready: tighter narrative, sharper numbers, confident Q&A

Compounding reps: weekly pitch practice + accountability

Foundations locked: founder alignment & governance, finance controls, legal/IP hygiene, disciplined hiring

CALENDAR LOGIC (no faff)

If you’ve registered or joined GSA calls, we’ll add you to this week’s sessions and the events will drop into your calendar automatically.

How your calendar gets it:

We add you → a standard .ics invite is emailed and should auto-add in Outlook, Apple or Google calendars.

You register via the links → the confirmation email sends the same .ics.

Didn’t see it? Check Spam/Promotions. If your app doesn’t auto-add, open the email and click Add to calendar (or download the .ics and open it).

Stuck? Quick troubleshooting guide

HOUSE RULES

Be on time. Cameras on.

Work the work: we build live; you finish in the week.

Ask early: blockers kill momentum — flag them fast.

FOR THE ❤️ OF STARTUPS

Thank you for reading. If you liked it, share it with your friends, colleagues and everyone interested in the startup investor ecosystem.

If you've got suggestions, an article, research, your tech stack, or a job listing you want featured, just let me know! I'm keen to include it in the upcoming edition.

Please let me know what you think of it. I love a feedback loop 🙏🏼

🛑 Get a different job.

Subscribe below and follow me on LinkedIn or Twitter to never miss an update.

For the ❤️ of startups

✌🏼 & 💙

Derek