📣 The web you’ve been optimising for is splitting in half. One layer for humans. One for machines. Ignore either and you’re invisible.

📈 This isn’t theoretical. Perplexity handled 500 million queries in December 2024. ChatGPT has 300+ million weekly active users. Behind those numbers, people aren’t reading your blog posts. Machines are summarising them, citing them, or worse, inventing facts about you because your actual facts weren’t machine-readable.

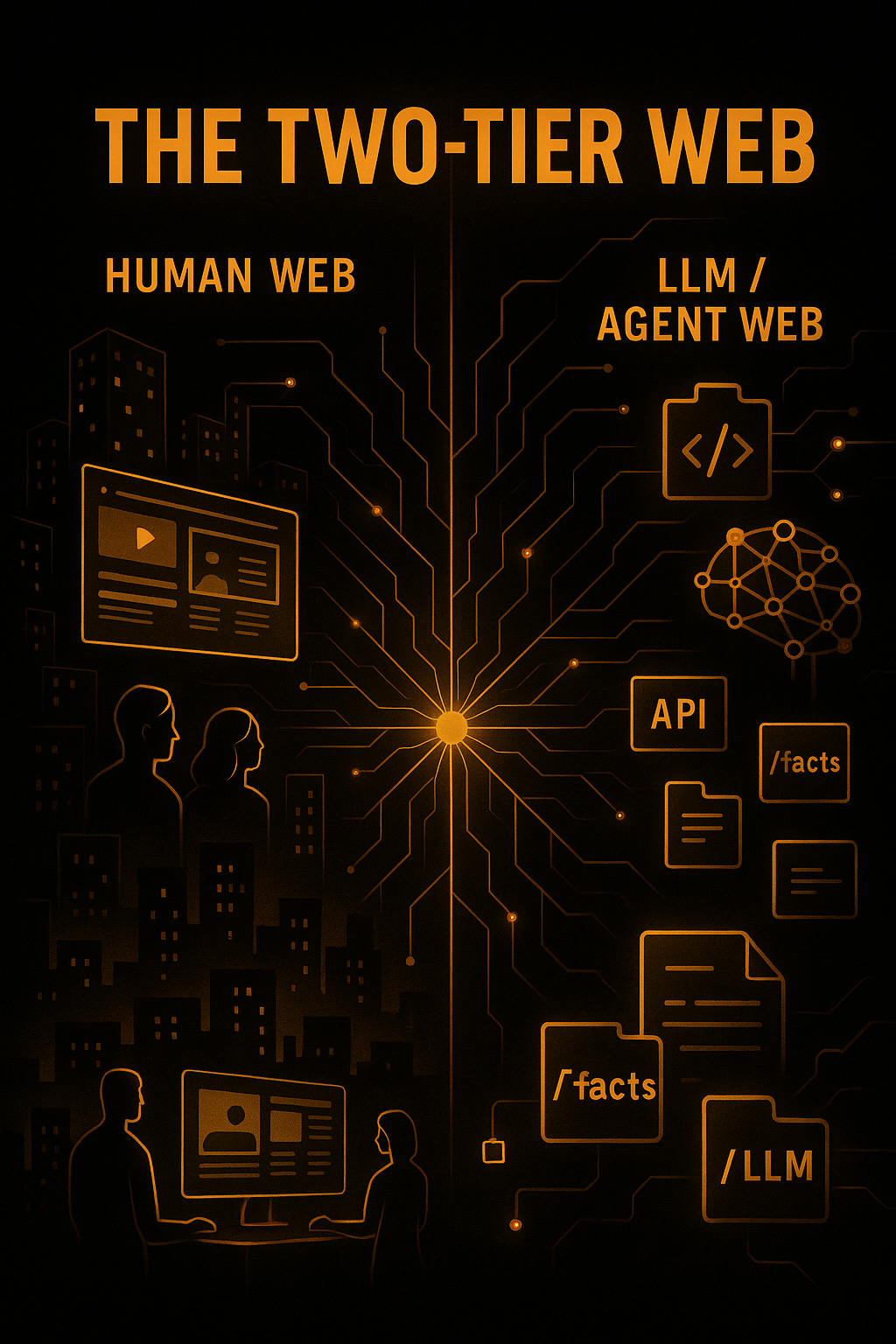

🧭 The Split

🧑‍🤝‍🧑 Tier 1: Human Web

Pages, images, video. Built to persuade and convert flesh-and-blood buyers. Measured by SEO rank, click-through rates, dwell time. Trust comes from reviews, social proof, the usual suspects.

This is the web we know. The one you’ve spent a decade optimising. Persuasive copy, conversion funnels, retargeting pixels. It still matters — humans still buy things. But it’s no longer the only game.

🤖 Tier 2: LLM/Agent Web

Facts, schemas, APIs. Built to be retrieved, parsed, and actioned by AI systems. Delivered via /llms.txt, structured data, OpenAPI specs. Trust comes from provenance stamps, citations, audit trails.

This is the new layer. Silent, invisible, ruthlessly efficient. When someone asks Claude “which CRM has the best API documentation?”, it’s not reading your landing page. It’s parsing your OpenAPI spec, checking your schema.org markup, looking for /llms.txt. If you haven’t made your facts machine-readable, you don’t exist.

⏰ Why This Matters Right Now

🔀 Search is fracturing.

Google’s AI Overviews now appear on roughly 15% of searches in the US. That percentage is climbing. Perplexity’s answer engine doesn’t show ten blue links — it shows one synthesised answer with citations. When it cites you, brilliant. When it doesn’t, you’re invisible.

Look at the data: traditional organic click-through rates are dropping. Zero-click searches — where the user gets their answer without leaving the SERP — were already 57% of Google searches in 2023. Add AI summaries on top and you’re looking at a fundamental shift in how people discover information.

🧠 Machines curate reality.

By the time a human sees your content, an LLM has already decided if you’re worth mentioning.

Real example: Ask ChatGPT “what are the best project management tools for remote teams?” It’ll mention Asana, Notion, Monday.com. Why those three? Because they have clean, structured data. Pricing pages with schema markup. /facts pages with stable URLs. API documentation that’s actually parsable.

Ask the same question about lesser-known tools with poor structured data, and watch them get skipped entirely — even if they’re objectively better products. The LLM can’t verify the facts, so it defaults to what it can verify.

⚠️ Brand risk is upstream.

In January 2025, a B2B SaaS company discovered ChatGPT was telling users their enterprise plan started at $299/month. Actual price? $999/month. The hallucination came from an outdated blog post, not their pricing page, because they hadn’t published a machine-readable /facts endpoint.

Cost of that error? Unknown. But every enterprise prospect who asked an AI chatbot about pricing got wrong information. That’s not a bug in ChatGPT — that’s a gap in their GTM strategy.

🛠️ What To Do (Next Week, Not Next Quarter)

🧩 Build for both layers.

Keep your pages persuasive for humans. Make your facts machine-readable.

Stripe does this brilliantly. Their pricing page is beautifully designed for humans — clear value props, conversion-optimised copy. But view source and you’ll see immaculate schema.org markup. Every price point, every feature, every limitation is structured data. When an LLM needs Stripe’s pricing, it doesn’t guess. It knows.

Stable URLs matter more than you think. If your pricing page is /pricing-2024-update-final-v3, you’ve already lost. LLMs cache references. If your URL changes, citations break. Stripe’s pricing page has been at /pricing for years. That consistency compounds.
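To make the structured-data idea concrete, here is a minimal sketch of the kind of schema.org JSON-LD a pricing page could embed. The plan name, price and URL are invented for illustration. This is not Stripe’s actual markup, just one plausible shape using the schema.org Product and Offer types.

```python
import json

def pricing_jsonld(name: str, url: str, price: str, currency: str) -> str:
    """Return a <script> tag holding schema.org Product/Offer JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "url": url,
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
            "url": url,
        },
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'

# Hypothetical plan and URL, for illustration only.
snippet = pricing_jsonld(
    "Example Enterprise Plan", "https://example.com/pricing", "999.00", "USD"
)
print(snippet)
```

The point of the sketch: the price lives in one unambiguous field on one stable URL, so a parser never has to infer it from copy.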

🗺️ Publish /llms.txt.

This is embarrassingly simple and criminally underused. Ten high-intent links with one-line descriptions. A clean map for agents, owner-controlled.

Example from Anthropic:

Anthropic LLM Discovery

Claude API: https://anthropic.com/api - Production API for Claude

Pricing: https://anthropic.com/pricing - Usage-based pricing and limits

Safety: https://anthropic.com/safety - Constitutional AI approach

Not guessing, not scraping — you telling them what matters. Cloudflare launched theirs in late 2024. Shopify followed. It takes 20 minutes and it works.
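If you’d rather generate the file than hand-write it, a rough sketch follows. It assumes the markdown layout described in the public llms.txt proposal (a title line, a short blockquote summary, then sections of linked one-liners). The company, URLs and descriptions are placeholders, and the helper names are hypothetical.

```python
from typing import NamedTuple

class Link(NamedTuple):
    title: str
    url: str
    note: str

def render_llms_txt(site: str, summary: str, sections: dict) -> str:
    """Render an llms.txt body: title, summary, then link lists per section."""
    lines = [f"# {site}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for link in links:
            lines.append(f"- [{link.title}]({link.url}): {link.note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"

# Placeholder content, for illustration only.
llms_txt = render_llms_txt(
    "Example Co",
    "Invoicing software for small agencies.",
    {
        "Key pages": [
            Link("Pricing", "https://example.com/pricing", "Current plans and limits"),
            Link("Facts", "https://example.com/facts", "Company basics and security posture"),
        ]
    },
)
print(llms_txt)
```

Serve the result as plain text at /llms.txt and regenerate it whenever one of the listed URLs changes.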

📄 Add /facts.

One public page: pricing, limits, security posture, company basics. Reduces hallucinations. Gives agents a single source of truth to cite.

MongoDB’s /facts page (they call it their “Developer Data Platform Facts”) includes:

Current version numbers with release dates

Precise pricing tiers with feature breakdowns

Security certifications with issue dates

API rate limits

Geographic availability

Last updated: visible at the top. URL: hasn’t changed in 18 months. Result: when an LLM needs MongoDB facts, it gets them right.

Contrast this with companies that scatter facts across blog posts, PDFs, and outdated press releases. The LLM either invents an answer or skips you entirely.
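One way to keep a /facts page honest is to generate it from a single record rather than editing copy by hand. The sketch below is an assumption about how that might look. The field names and values are hypothetical, and no standard schema for /facts is implied.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import date

@dataclass
class Facts:
    company: str
    last_updated: str                 # ISO 8601 date, shown at the top of the page
    pricing: dict                     # plan name -> human-readable price
    api_rate_limit_per_minute: int
    certifications: list = field(default_factory=list)

def render_facts(facts: Facts) -> str:
    """Serialise the facts record as stable, sorted JSON."""
    return json.dumps(asdict(facts), indent=2, sort_keys=True)

# Hypothetical values, for illustration only.
facts = Facts(
    company="Example Co",
    last_updated=date(2025, 1, 15).isoformat(),
    pricing={"enterprise": "USD 999/month"},
    api_rate_limit_per_minute=600,
    certifications=["SOC 2 Type II"],
)
facts_json = render_facts(facts)
print(facts_json)
```

The same record can feed the human-readable page and the machine-readable one, so the two never disagree.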

🕒 Stamp freshness.

“Last updated” on every key page. Keep URLs stable. Keep your sitemap’s <lastmod> current. Agents care about staleness.

Why? LLMs are trained on point-in-time snapshots. When ChatGPT sees content from 2022 versus 2025, it tends to weight the recent material more heavily, but only if it can verify freshness.

Intercom stamps every help article with “Last updated” and keeps their sitemap meticulously current. When their features change, the LLM knows it’s working with current information. Companies without freshness signals get cited less, or get cited with outdated facts.
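Keeping <lastmod> current is easier when the sitemap is built from real modification dates. Here is a minimal sketch using Python’s standard library and the sitemaps.org namespace. The URLs and dates are placeholders, and how you obtain each page’s true modification date is left to your CMS.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages: dict) -> str:
    """Build a sitemap string from a mapping of URL -> last-modified date."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, modified in pages.items():
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # <lastmod> takes a W3C date; YYYY-MM-DD is the simplest valid form.
        ET.SubElement(url, "lastmod").text = modified.isoformat()
    return ET.tostring(urlset, encoding="unicode")

# Placeholder pages and dates, for illustration only.
sitemap_xml = build_sitemap({
    "https://example.com/pricing": date(2025, 1, 15),
    "https://example.com/facts": date(2025, 1, 10),
})
print(sitemap_xml)
```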

📊 Track it.

Append ?utm_source=llm to links you share with AI tools. Add “AI/LLM” as a GA4 channel. Know what’s working.

One B2B company started tracking this in September 2024. By December, 8% of their demo requests were coming from LLM referrers. They’d been completely blind to this channel before.

The traffic behaves differently too. AI-referred visitors spend 40% less time on site (they’ve already been pre-qualified by the LLM), but convert at 2.3x the rate. They’re further down the funnel when they arrive.
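The ?utm_source=llm tagging is simple to get subtly wrong on URLs that already carry a query string or fragment. A small sketch using only the standard library follows. The function name is hypothetical and the example URLs are placeholders.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

def tag_for_llm(url: str, source: str = "llm") -> str:
    """Return the URL with utm_source set, preserving other params and fragment."""
    parts = urlsplit(url)
    # Drop any existing utm_source so the parameter is never duplicated.
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key != "utm_source"
    ]
    query.append(("utm_source", source))
    return urlunsplit(parts._replace(query=urlencode(query)))

print(tag_for_llm("https://example.com/pricing"))
print(tag_for_llm("https://example.com/facts?ref=a#plans"))
```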

📌 The Proof Is Mounting

🧪 Shopify’s experiment: In Q4 2024, they published /llms.txt and a structured /facts page. Within six weeks, they saw a 34% increase in accurate citations across ChatGPT and Perplexity compared to competitors who hadn’t. Their brand mentions in AI-generated responses went up. Misquotes went down.

📈 HubSpot’s data: They tracked LLM referral traffic starting in mid-2024. By January 2025, it represented 6% of all inbound traffic — roughly the same as their entire Twitter channel. The kicker? They weren’t doing anything to optimise for it. Imagine if they were.

🔎 Anecdotal but telling: Ask ChatGPT “what’s the pricing for [obscure B2B SaaS tool]” versus “what’s the pricing for Salesforce?” Salesforce gets accurate answers. The obscure tool? Hallucinated prices or “I don’t have current pricing information.” The difference isn’t brand size — it’s structured data.

⚡ The Zapier signal: Zapier started tracking perplexity.ai and chat.openai.com as referrers in August 2024. By December, those two sources combined were sending more qualified leads than their entire Reddit presence. And they’d done nothing to optimise for it — this was just organic AI discovery of their already-good documentation.

⏱️ 2-Minute Validation

Right, enough theory. Let’s prove this works for you.

Drop your /facts URL into ChatGPT or Perplexity. Click through.

If you don’t have a /facts page yet, use your pricing page. Ask: “What are the pricing options for [your company]?” See if it gets it right. See if it cites you. If it hallucinates or says “I don’t have current information,” you’ve just identified a revenue leak.

GA4 → Realtime. Look for referrers.

Check for:

perplexity.ai

chat.openai.com and chatgpt.com

claude.ai

copilot.microsoft.com

gemini.google.com

you.com

phind.com

You’ll probably see at least some traffic. Most companies do and don’t realise it. If you’re seeing zero, either you’re not being cited (problem) or you’re not tracking it (also problem).
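If you have raw referrer URLs (server logs, an analytics export), the check above can be scripted. This is a rough sketch, not a GA4 feature. The domain list is the one given above, the labels are my own shorthand, and you should extend the mapping as new assistants appear.

```python
from typing import Optional
from urllib.parse import urlsplit

# Referrer domains from the checklist above; labels are illustrative.
AI_REFERRERS = {
    "perplexity.ai": "Perplexity",
    "chat.openai.com": "ChatGPT",
    "claude.ai": "Claude",
    "copilot.microsoft.com": "Copilot",
    "gemini.google.com": "Gemini",
    "you.com": "You.com",
    "phind.com": "Phind",
}

def classify_referrer(referrer: str) -> Optional[str]:
    """Return the AI source label for a referrer URL, or None if not an AI source."""
    host = (urlsplit(referrer).hostname or "").lower()
    for domain, label in AI_REFERRERS.items():
        # Match the domain itself or any subdomain such as www.
        if host == domain or host.endswith("." + domain):
            return label
    return None

print(classify_referrer("https://www.perplexity.ai/search?q=crm"))
print(classify_referrer("https://www.google.com/"))
```

Matching on the parsed hostname, rather than a substring of the whole URL, avoids false positives from lookalike domains.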

Reports → Acquisition → Traffic acquisition. Filter by those sources.

Look at the volume. Look at the behaviour. These visitors often have higher bounce rates (they got their answer) but also higher conversion rates (they’re pre-qualified). Don’t judge them by traditional metrics.

Reports → Engagement → Pages and screens. Add “Session source” as secondary dimension.

See which AI sent which traffic. Which pages are getting discovered? Are LLMs sending people to your homepage (inefficient) or your pricing page (efficient)? This tells you what’s working.
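Once you have those rows exported, comparing sources by conversion rate rather than time on site takes a few lines. The sketch below assumes a hypothetical export shape (one dict per source and page, with sessions and conversions). Your real column names will differ, and the numbers are made up.

```python
from collections import defaultdict

def conversion_by_source(rows: list) -> dict:
    """Aggregate sessions and conversions per source and return conversion rates."""
    totals = defaultdict(lambda: {"sessions": 0, "conversions": 0})
    for row in rows:
        bucket = totals[row["source"]]
        bucket["sessions"] += row["sessions"]
        bucket["conversions"] += row["conversions"]
    return {
        source: t["conversions"] / t["sessions"]
        for source, t in totals.items()
        if t["sessions"] > 0  # skip sources with no sessions to avoid dividing by zero
    }

# Made-up rows in an assumed export format, for illustration only.
rows = [
    {"source": "perplexity.ai", "page": "/pricing", "sessions": 40, "conversions": 4},
    {"source": "perplexity.ai", "page": "/", "sessions": 10, "conversions": 1},
    {"source": "google", "page": "/", "sessions": 200, "conversions": 8},
]
rates = conversion_by_source(rows)
print(rates)
```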

One agency did this exercise and discovered Claude was sending traffic to a three-year-old case study nobody remembered publishing. It was well-structured, had clean markup, and answered a specific question perfectly. They updated it, promoted it, and now it’s their second-highest converting page for AI traffic.

🧩 What The Sceptics Get Wrong

“But won’t Google penalise us for duplicate content if we publish structured facts?”

No. Schema.org markup and structured data aren’t duplicate content. They’re semantic enrichment. Google co-founded schema.org with Microsoft, Yahoo and Yandex. They want you to use it.

“Isn’t this just SEO with extra steps?”

Opposite. SEO optimises for Google’s algorithm. This optimises for any AI that needs facts. When the next AI search engine launches tomorrow, your structured data works there too. You’re building infrastructure, not gaming a single algorithm.

“What if LLMs just steal our content?”

They’re already using your content — that’s how they were trained. The question is whether they’re citing you accurately or hallucinating about you. Publishing structured facts reduces hallucinations and increases accurate citations. That’s brand defence, not surrender.

✅ Bottom Line

Make your story human. Make your facts machine-ready. Then let the agents bring the buyers to you.

The two-tier web isn’t coming. It’s here. Companies that recognise this early — Stripe, Shopify, Intercom, MongoDB — are already winning AI-driven traffic they didn’t even know to optimise for.

Companies that ignore it are getting hallucinated, misquoted, or skipped entirely. And they won’t even know why their competitors are suddenly appearing in ChatGPT responses whilst they’re not.

You don’t need to rebuild your entire stack. You need /llms.txt, a /facts page, proper schema markup, and tracking. Four things. Each takes less than a day.

The machines are already talking about you. The question is whether you’re giving them the right words.

Thank you for reading. If you liked it, share it with your friends, colleagues and everyone interested in the startup investor ecosystem.

If you've got suggestions, an article, research, your tech stack, or a job listing you want featured, just let me know! I'm keen to include it in the upcoming edition.

Please let me know what you think of it, love a feedback loop 🙏🏼

🛑 Get a different job.

Subscribe below and follow me on LinkedIn or Twitter to never miss an update.

For the ❤️ of startups

✌🏼 & 💙

Derek