The F42 AI Brief #054: AI Signals You Can’t Afford to Miss

A sharp week for AI ethics, regulation, and founder playbooks.

Here’s your Monday dose of The AI Brief.

Your weekly dose of AI breakthroughs, startup playbooks, tool hacks and strategic nudges—empowering founders to lead in an AI world.

It’s been a week of major controversy, frontier launches, and global regulatory moves, forcing founders everywhere to rethink ambitions and compliance. Dive in for the sharpest actionable intelligence:

📈 Trending Now

The week’s unmissable AI headlines.

💡 Innovator Spotlight

Meet the change-makers.

🛠️ Tool of the Week

Your speed-boost in a nutshell.

📌 Note to Self

Words above my desk.

📈 Trending Now

🚨 Character.AI Bans Teens After Suicide Lawsuits Trigger Global Outrage

Character.AI is in serious trouble. Multiple families in the US have filed lawsuits claiming the platform’s chatbots encouraged minors toward self-harm, including advice that allegedly contributed to teen suicides. The legal filings, combined with a wave of media coverage and political pressure, forced Character.AI to take the nuclear option: an immediate ban on all users under 18, plus emergency parental controls and new safety layers.

The company insists its models weren’t designed for emotional dependence, but leaked excerpts from conversations shared by families have intensified scrutiny. Regulators in the US and EU now want answers on safety audits, model oversight, and whether the platform knowingly allowed minors to bypass age checks. Activist groups are already calling for broader restrictions across all “AI companions”.

This is the biggest ethics and governance story of the week.

Founders: If your product touches behaviour, emotions, or vulnerable users, you can’t bolt safety on later. Build age controls, audit trails, and escalation paths before scale—regulators will move faster than you think.
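To make that concrete, here is a minimal sketch of the three pieces wired together: an age gate, an audit trail, and an escalation path. Everything in it is an assumption for illustration, not how Character.AI or any other platform does it: the `MIN_AGE` threshold, the `RISK_TERMS` keyword list (a stand-in for a real safety classifier), and the `AuditLog` and `handle_message` names are all hypothetical. It also trusts a self-reported age, which a real product should not.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

MIN_AGE = 18  # assumption: adults-only product
RISK_TERMS = {"self-harm", "suicide"}  # placeholder keywords, not a real classifier


@dataclass
class AuditLog:
    """In-memory audit trail; a real system would persist this durably."""
    entries: list = field(default_factory=list)

    def record(self, user_id: str, event: str, detail: str = "") -> None:
        self.entries.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "user_id": user_id,
            "event": event,
            "detail": detail,
        })


def handle_message(user_id: str, age: int, text: str, log: AuditLog) -> str:
    """Return 'blocked', 'escalated', or 'allowed', logging every decision."""
    # Age gate: stop under-age users before any model call happens.
    if age < MIN_AGE:
        log.record(user_id, "blocked_underage")
        return "blocked"

    # Escalation path: flag risky content for human review.
    lowered = text.lower()
    hits = sorted(term for term in RISK_TERMS if term in lowered)
    if hits:
        log.record(user_id, "escalated", ",".join(hits))
        return "escalated"

    log.record(user_id, "allowed")
    return "allowed"
```

The design point is that every branch writes to the log, including the "allowed" one, so you can show a regulator what the system decided and when.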

⚖️ Musk vs OpenAI Fast-Tracked

Elon’s revived lawsuit—accusing OpenAI of abandoning its nonprofit mandate—now has an accelerated timeline. The filings pull Microsoft into the spotlight and could redefine how labs defend “open science” narratives.

Founders: Keep your mission, incentives, and governance aligned. Document decisions.

🏛️ EU Considers Delaying Parts of the AI Act

New drafts show targeted delays and lighter reporting after heavy lobbying from the US and Big Tech. Enforcement is still coming, but expect patchy implementation across member states.

Founders: Map compliance by country; local rules may bite faster than Brussels.

🎬 Google Veo 3 Expands to 71 Countries

Creators flooded feeds with demos after Google’s global rollout. Improvements in motion control, fidelity, and prompt precision make this Google’s biggest synthetic-video push yet.

Founders: Use Veo as cheap creative throughput—ads, explainers, experiments.

🏗️ OpenAI Pushes to Expand CHIPS Credits to Data Centres

OpenAI wants the 35% Advanced Manufacturing Investment Credit (AMIC) to cover AI servers, grid upgrades, and other infrastructure. That could shape where the next wave of compute clusters lands.

Founders: Track incentives—compute pricing is about to fragment.

🐉 Nvidia’s Blackwell Demand Outruns HBM Supply

Jensen Huang says demand is outstripping memory availability. Expect short-term shortages even as sovereign and enterprise orders spike.

Founders: Don’t build on single vendors. Plan for scarcity.
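One way to read "plan for scarcity" at the software layer is a simple fallback chain across compute or model providers. The sketch below is an assumption-heavy illustration: `complete_with_fallback`, `flaky_primary`, and `steady_backup` are hypothetical names, and the two provider functions are stubs rather than real vendor SDK calls.

```python
from typing import Callable, Sequence

Provider = Callable[[str], str]


class AllProvidersFailed(RuntimeError):
    """Raised when every provider in the chain has errored."""


def complete_with_fallback(
    prompt: str, providers: Sequence[tuple[str, Provider]]
) -> tuple[str, str]:
    """Try each (name, provider) in order; return (name, result) from the first that works."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Stub providers standing in for real vendor clients (hypothetical).
def flaky_primary(prompt: str) -> str:
    raise TimeoutError("capacity exhausted")


def steady_backup(prompt: str) -> str:
    return f"echo: {prompt}"
```

Calling `complete_with_fallback("hi", [("primary", flaky_primary), ("backup", steady_backup)])` skips the failing primary and returns the backup's answer along with its name, so you can track how often you are actually running on the second choice.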

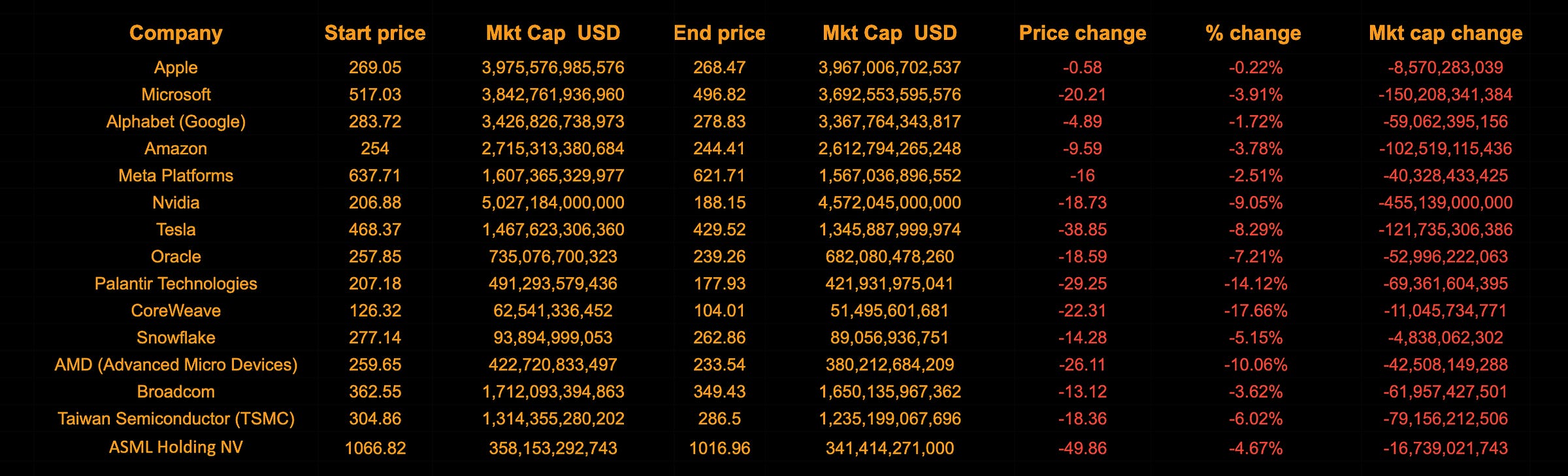

📉 AI Stocks Lead a Sharp Weekly Tech Sell-Off

A broad correction hit AI-first names (Nvidia, Palantir, Micron, Meta, Amazon, Oracle), driven by valuation fears, analyst downgrades, and Michael Burry’s widely shared short positions.

Founders: Keep revenue quality high and costs controlled. Public markets are punishing hype.

Despite all the recent fear-mongering, this isn’t a classic bubble scenario—at least, not yet. Market pullbacks are normal after strong rallies, and headline panic tends to exaggerate every dip. If your outlook is beyond the next two seconds—say, one, two, or even ten years out—AI leaders still look comparatively cheap relative to their long-term potential. In my view, there’s time to run: fundamentals for the AI revolution are real and remain solid, and selling into every correction misses what is a generational wealth opportunity for patient investors.

The Deepdive Podcast and Article

The Illusion of Sovereign AI

Everyone’s racing to build “national” AI — data centres, chip fabs, multi-billion announcements. But what if it’s all theatre?

Another coffee and a bit of thinking later… and the whole “sovereign AI” narrative starts to look like theatre. You can build all the datacentres you like, announce GPU parks, and launch national AI models — but the world simply can’t produce enough leading-edge chips. And here’s the uncomfortable bit: if you want more chips, you need more fabs — and th…

💡 Innovator Spotlight

👉 Character.AI Launches Independent AI Safety Lab

👉 Who they are:

– Character.AI, the controversial chatbot entertainment platform.

👉 What’s unique:

– Amid lawsuits and regulatory blowback over chatbot risks to minors, Character.AI made an unexpected pivot. Last week, it announced the funding and launch of the AI Safety Lab: an independent nonprofit tasked with pioneering safety alignment methods for future AI entertainment, distinct from standard coding or infrastructure work. By separating safety R&D from commercial pressure, the company is betting on trust-building and setting a new bar for transparency and pre‑emptive risk mitigation in creative AI.

👉 Pinch‑this lesson:

– Proactively carve out independent safety efforts—don’t let compliance catch you flat-footed.

👉 Source:

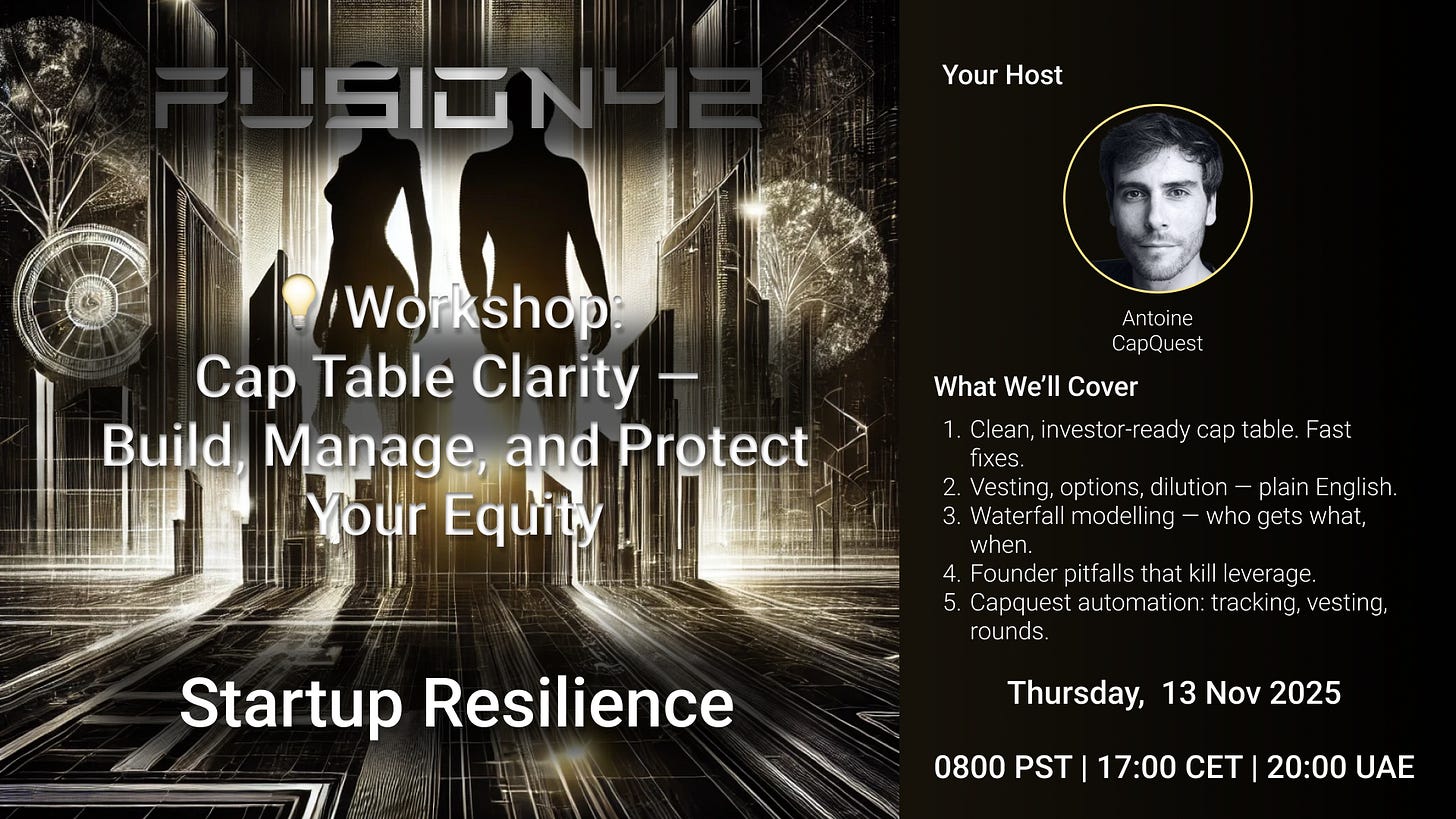

💡 Workshop: Cap Table Clarity — Build, Manage, and Protect Your Equity

Your cap table is your story of ownership. Get it wrong, and you’ll lose more than equity — you’ll lose leverage.

In this live workshop with Antoine Bruna (CEO, Capquest), we’ll clean up the chaos and show you how to model dilution, vesting and exits in plain English.

What we’ll cover:

How to structure a clean, investor-ready cap table (and fix messy ones).

Understanding vesting, option pools, and founder dilution — what they mean in real terms.

Modelling waterfall scenarios so you know who actually gets what at each stage or exit.

Common founder mistakes that destroy leverage — and how to avoid them.

How Capquest automates all of it: equity tracking, updates, vesting schedules, and round planning, so you’re always due-diligence ready.
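If you want a feel for the arithmetic before the session, the three core calculations can be sketched in a few lines. This is a simplified illustration, not Capquest's model: the function names are hypothetical, the round has no option-pool top-up or pro-rata rights, vesting is linear with a one-year cliff over four years, and the waterfall assumes a single investor holding 1x non-participating preferred.

```python
def dilute(ownership: float, pre_money: float, investment: float) -> float:
    """Ownership fraction after a priced round, with no pro-rata participation."""
    post_money = pre_money + investment
    return ownership * pre_money / post_money


def vested_shares(
    total: int, months_elapsed: int, cliff_months: int = 12, vesting_months: int = 48
) -> int:
    """Linear monthly vesting: nothing before the cliff, fully vested at the end."""
    if months_elapsed < cliff_months:
        return 0
    return total * min(months_elapsed, vesting_months) // vesting_months


def waterfall(
    exit_value: float, preference: float, investor_share: float
) -> tuple[float, float]:
    """Split exit proceeds into (investor, common) for 1x non-participating preferred.

    The investor takes whichever is larger: the liquidation preference
    (capped at the exit value) or their share if they convert to common.
    """
    investor = max(min(exit_value, preference), exit_value * investor_share)
    return investor, exit_value - investor
```

For example, a founder holding 50% before a round of 2m on an 8m pre-money valuation ends up with 40%, and the same investor at 20% ownership takes the full proceeds of a 1m exit but converts to common at a 20m exit.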

What you’ll get:

A clearer view of your ownership journey, practical steps to protect it, and a free Capquest account to start modelling your own cap table. Use the code F42START.

🛠️ Tool of the Week

1. OpenGuardrails

URL: https://helpnetsecurity.com/2025/11/05/openguardrails-safer-ai/

What it does: Detects unsafe, manipulated, or privacy-violating content in large language models.

Why founders should care: Instantly build compliance and parental protections into any chatbot or entertainment app.

Quick start tip: Plug the API into your dev stack and test your core user flows for edge-case violations.

—————————————————————————

2. Petri

URL: https://anthropic.com/blog/petri-open-source-ai-safety

What it does: Open-source tool for auditing risky interactions in AI models used in entertainment chatbots.

Why founders should care: You can run independent safety audits before launching your model.

Quick start tip: Download the repo and run sample audits against your top chatbot features.

—————————————————————————

3. Comet AI Browser

URL: https://perplexity.ai/comet

What it does: Integrates chatbot context and safety features directly into web browsing for apps.

Why founders should care: You’ll offer users one-click contextual safety summaries right in your interface.

Quick start tip: Install the extension then embed summary widgets in your user dashboard.

—————————————————————————

4. Noma Security

URL: https://nomasecurity.ai/platform

What it does: Maps and protects all AI assets and applications across your startup with runtime protection.

Why founders should care: Zero Trust posture reduces risk for chat-based or entertainment platforms.

Quick start tip: Connect your cloud and code repos to begin automated inventory and risk monitoring.

—————————————————————————

5. Centraleyes Smart Risk Register

URL: https://centraleyes.com/ai-risk-register

What it does: Automates mapping risks to controls for AI compliance and regulatory reporting.

Why founders should care: You’ll consolidate safety and audit logs for rapid regulatory response.

Quick start tip: Load your control frameworks and generate instant compliance reports.

—————————————————————————

6. AuditBoard GenAI

URL: https://auditboard.com/genai

What it does: AI-driven workflows generate safety documentation and risk statements for apps.

Why founders should care: Streamline safety paperwork and internal audits—no legacy overheads.

Quick start tip: Use their integrated templates to start safety statement generation.

—————————————————————————

7. Clark AI Agent

URL: https://superblocks.com/clark-ai

What it does: Routes user requests with specialized agent networks for design, QA, and security on AI models.

Why founders should care: Instantly scale safety oversight across product lifecycles.

Quick start tip: Integrate Clark into your app for human-in-the-loop error checking.

—————————————————————————

8. Sparkco Insight

URL: https://sparkco.ai/insight

What it does: Visualises model interpretability and safety using agent-based and vector database systems.

Why founders should care: Clear visual safety diagnostics for founders and compliance leads.

Quick start tip: Upload your model and inspect semantic search audit logs.

—————————————————————————

9. Forethought Agatha AI

URL: https://forethought.ai/agatha

What it does: Enterprise-grade chatbot with built-in safety checks and automated escalations.

Why founders should care: Handles real customer support safely—no missed compliance edges.

Quick start tip: Set escalation criteria for risky interactions and monitor weekly updates.

—————————————————————————

10. Character.AI Parental Controls Suite

URL: https://character.ai/parental-controls

What it does: New parental-verification and age-banning toolkit for entertainment chatbots.

Why founders should care: Protects your most vulnerable users and keeps regulators at bay.

Quick start tip: Deploy verification modules and review safety logs for underage interactions.

📌 Note to Self

FOR THE ❤️ OF STARTUPS

Thank you for reading. If you liked it, share it with your friends, colleagues and everyone interested in the startup investor ecosystem.

If you've got suggestions, an article, research, your tech stack, or a job listing you want featured, just let me know! I'm keen to include it in the upcoming edition.

Please let me know what you think of it. I love a feedback loop 🙏🏼

🛑 Get a different job.

Subscribe below and follow me on LinkedIn or Twitter to never miss an update.

For the ❤️ of startups

✌🏼 & 💙

Derek