The Application Layer Playbook (Why You Should Build)

Part 5 of 5: The Great AI Infrastructure Build-Out of 2025

Let me be direct: If you read Parts 1-4 and concluded “I should sit this out,” you misunderstood who the analysis was for.

The infrastructure investors should sit out. The people buying GPUs, building data centers, and financing CoreWeave’s debt—yes, they should absolutely reconsider. But you, building tools and applications at the application layer, are in a completely different position. You should be building right now, with discipline and urgency.

Here’s why the entire analysis changes when you’re not the one buying the depreciating hardware.

⚡ The Critical Distinction Nobody’s Making

The first four parts of this series analyzed the AI boom through one lens: infrastructure investment. Should you buy GPUs? Should you invest in companies building data centers? Should you bet on Oracle’s $100 billion gamble? For those questions, the answer was clear—mostly no. The depreciation risk is real, the unit economics are questionable, and circular financing creates systemic vulnerabilities that echo 2008.

But there’s a completely different question that requires a completely different analysis: Should you build applications that use this infrastructure?

Think about the difference. An infrastructure investor buys H100 GPUs at $30,000 each, watches them depreciate to $10,000 in three years, and hopes to recoup the investment through rental income. They’re exposed to technological obsolescence, market timing, and the risk that new chip architectures make their entire fleet worthless. They’ve deployed capital into physical assets that lose value every month.

You, on the other hand, rent compute from AWS on a month-to-month basis. You have no capex exposure. Your costs scale directly with your revenue. You’re hardware agnostic: if Anthropic’s Claude becomes cheaper than OpenAI’s GPT, you switch with a config change. And here’s the part that makes all the difference: when those H100s lose two-thirds of their value, competition pushes providers to pass the savings on through lower API prices. Your inference costs can drop by as much as 10×, your margins expand, and you capture the upside without ever touching the downside.

This is the railway equivalent of using the trains versus owning the tracks. One is a capital-intensive bet on infrastructure holding value. The other is paying for transport and benefiting as competition drives prices down. The risk profiles aren’t just different—they’re inverses of each other.

⏰ Why This Moment Won’t Last

The infrastructure boom has created a temporary window that won’t repeat. Right now, AWS, Azure, and GCP are desperately trying to fill capacity. They’ve overbuilt—whether rationally or not doesn’t matter to you—and they need workloads. This means startup programs, cloud credits, and aggressive API pricing to win market share. Model providers are locked in competition, which translates to you getting GPT-4-level capabilities at prices that were unthinkable 18 months ago.

But this abundance won’t last forever. When the correction hits in 2027-2029, cloud providers will rationalize pricing. The free credits dry up. The aggressive customer acquisition spending stops. If you’re not already in production, already generating revenue, already proving unit economics work, you’ll be trying to start a company in a capital drought with higher costs and tighter budgets.

The second reason this moment matters is that current models are good enough for most valuable applications. You don’t need AGI to build a code generation tool that developers will pay $20 a month for. You don’t need artificial general intelligence to build a document management system with semantic search. You don’t need breakthrough capabilities to build vertical SaaS for legal contract analysis or healthcare documentation.

If you’re waiting for “better models,” you’re making an excuse. GPT-4 and Claude 4 are sufficient for building real businesses today. The people waiting for GPT-5 or AGI are the same people who waited for the “right time” to start a company and never did. The right time is when you can ship something customers will pay for, and that time is now.

The third reason is customers have budget. Enterprises allocated AI spending for 2025 and 2026. Microsoft is selling Copilot at $30 per user per month to millions of people. GitHub Copilot has millions of paying subscribers at $10-20 per month. This is real money being spent right now. If you can build something that delivers clear ROI, enterprises will buy it from their AI budgets. But after the correction, those budgets get cut. The window to capture that spending is the next 18 months.

🎯 The 18-Month Revenue Rule

If you take one thing from this entire series, make it this: get to meaningful revenue in 18 months or don’t build at all.

The math is brutal but clear. If you start building today, you need to ship an MVP by late 2025, get your first revenue by early 2026, and have real traction by mid-2026. That’s because the infrastructure correction likely begins in 2027. When it hits, capital markets freeze. Series B rounds become difficult or impossible. Customer budgets tighten. The companies that survive are the ones already generating revenue with proven unit economics.

What does “meaningful revenue” actually mean? It’s not 10 customers paying $100 a month. It’s not a free tier with a vague conversion funnel. It’s not pilots that might convert eventually. Meaningful revenue is $500,000 in annual recurring revenue with a clear path to $2 million, with unit economics that work. That means lifetime value over three times your customer acquisition cost, month-over-month growth above 15%, customer retention above 85%, and gross margins above 70%.
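If you want to hold yourself to that bar, it helps to turn it into a check you can run every month. Here’s a minimal sketch using the thresholds above; the `Metrics` fields and the example numbers are hypothetical, and how you measure LTV, CAC, and retention is up to you.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    arr: float           # annual recurring revenue, USD
    ltv: float           # lifetime value per customer, USD
    cac: float           # customer acquisition cost, USD
    mom_growth: float    # month-over-month growth, 0.15 == 15%
    retention: float     # customer retention, 0.85 == 85%
    gross_margin: float  # gross margin, 0.70 == 70%


def failed_checks(m: Metrics) -> list[str]:
    """Return the names of the thresholds from the text that are not met."""
    checks = {
        "ARR >= $500k": m.arr >= 500_000,
        "LTV/CAC > 3": m.cac > 0 and m.ltv / m.cac > 3,
        "MoM growth > 15%": m.mom_growth > 0.15,
        "retention > 85%": m.retention > 0.85,
        "gross margin > 70%": m.gross_margin > 0.70,
    }
    return [name for name, ok in checks.items() if not ok]


# Hypothetical company: solid on most metrics, but growing 12% month over month
example = Metrics(arr=600_000, ltv=9_000, cac=2_500, mom_growth=0.12,
                  retention=0.90, gross_margin=0.82)
print(failed_checks(example))
```

An empty list means you clear every threshold. Anything else is the list of things to fix before you scale spending.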

Why such a high bar? Because the correction will be unforgiving. When capital dries up and budgets tighten, only the companies with strong fundamentals survive. The ones burning cash hoping to “figure out monetization later” go to zero. The ones with negative unit economics can’t cut to profitability fast enough. The bar is high because the alternative is dying.

The timeline works like this: you have 18 months from today to hit meaningful revenue. That puts you at mid-2026. The correction likely starts in 2027. You need 12 to 18 months of revenue traction before the correction hits, because that’s what gives you options. With proven revenue and strong unit economics, you can raise a Series B preemptively in late 2026 and sit out the storm with a cash buffer. You can cut to profitability and grow organically. You can even get acquired by Microsoft or Google, who will absolutely buy revenue-generating AI companies before the correction.

Without revenue by mid-2026, you have no options. You can’t raise because investors want traction in a tightening market. You can’t get acquired because you have nothing to sell. You can’t cut to profitability because you were never profitable to begin with. You just run out of runway during a capital drought, and that’s the end.

This isn’t meant to be comforting. It’s meant to be realistic. The infrastructure boom created a window, and that window is closing. If you’re going to build, you need to move with urgency.

🏗️ Who Should Actually Build

Not every application-layer company has the same risk profile. Let me be precise about which categories make sense right now.

Developer tools have the highest confidence. We’re talking about code generation like Cursor and GitHub Copilot, automated documentation, testing tools, debugging assistants. Developers will pay $10 to $100 per month per seat for tools that save them time. The ROI is clear—time saved equals money saved—and usage-based pricing aligns incentives perfectly. Once a developer adopts a tool into their daily workflow, switching costs are high. You don’t change your code editor lightly.

The revenue timeline is fast. Six to 12 months to meaningful revenue is realistic because developers are early adopters. They’ll try your tool immediately if it’s good. The risk level is low because demand is proven—GitHub Copilot has millions of paying users—and the business model works. If you’re building developer tools, build with urgency. This is the best category to be in right now.

Vertical SaaS with AI features comes next. Legal tech for contract analysis and research. Healthcare tools for clinical documentation and diagnosis support. Financial services for risk analysis and fraud detection. Startup operating systems for execution tracking and knowledge management. These work because enterprises pay premium prices, often $500 to $5,000 per month per account. Regulation creates defensible moats. Domain expertise is required, which means the tools are hard to replicate. And critically, AI enhances existing workflows rather than trying to replace them entirely, which makes adoption easier.

The revenue timeline is longer—12 to 18 months to meaningful revenue because enterprise sales cycles take time. The risk level is medium. You need genuine domain expertise, not just engineering skill. You need patience for long sales processes. But if you have those things, this category offers sustainable businesses with real moats.

Knowledge and document management platforms sit in a similar space. These are tools like Notion AI, team knowledge bases, research platforms, document generation systems. The reason these work is network effects—more documents means better search and better insights. Switching costs are real because once a team has six months of knowledge in your system, migration is painful. And the value compounds over time. Knowledge assets appreciate, unlike hardware that depreciates.

The catch is you absolutely need vector search infrastructure. This isn’t optional. Semantic search is your core value proposition. “Find documents similar to this one” requires vector similarity. “Ask AI questions across all documents” requires RAG with embeddings. If you try to build this with keyword search alone, you’re not competitive. So you need Aurora PostgreSQL with pgvector, or Amazon Bedrock Knowledge Bases with OpenSearch Serverless, or a specialized vector database like Pinecone. That’s table stakes.
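To make “find documents similar to this one” concrete, here’s a minimal sketch of what vector similarity does under the hood. It’s brute-force cosine similarity in plain Python. The three-dimensional vectors and document names are made up for illustration; real embeddings come from an embedding model and have hundreds or thousands of dimensions, and a vector database would do this ranking for you with an index.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(query: list[float], docs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Rank every document against the query and return the top k names."""
    ranked = sorted(docs, key=lambda name: cosine_similarity(query, docs[name]),
                    reverse=True)
    return ranked[:k]


# Toy "embeddings" (hypothetical values, not produced by any real model)
docs = {
    "nda_template": [0.9, 0.1, 0.0],
    "msa_contract": [0.8, 0.3, 0.1],
    "lunch_menu": [0.0, 0.2, 0.9],
}
print(most_similar([1.0, 0.2, 0.0], docs))
```

The two contract-like documents rank above the lunch menu because their vectors point in nearly the same direction as the query. Keyword search can’t give you that ranking when the documents share meaning but not words.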

The categories I’m skeptical about are horizontal productivity tools competing directly with Microsoft and Google. AI note-taking, writing assistants, meeting transcription. The problem is Microsoft is integrating AI into Office and Google is doing the same with Workspace. You’re competing with bundled products that are often free or very cheap. The differentiation is hard, the platform risk is real, and customer acquisition costs are high. Unless you have a unique moat—proprietary data, strong network effects, exclusive distribution channel—these are tough businesses to build right now.

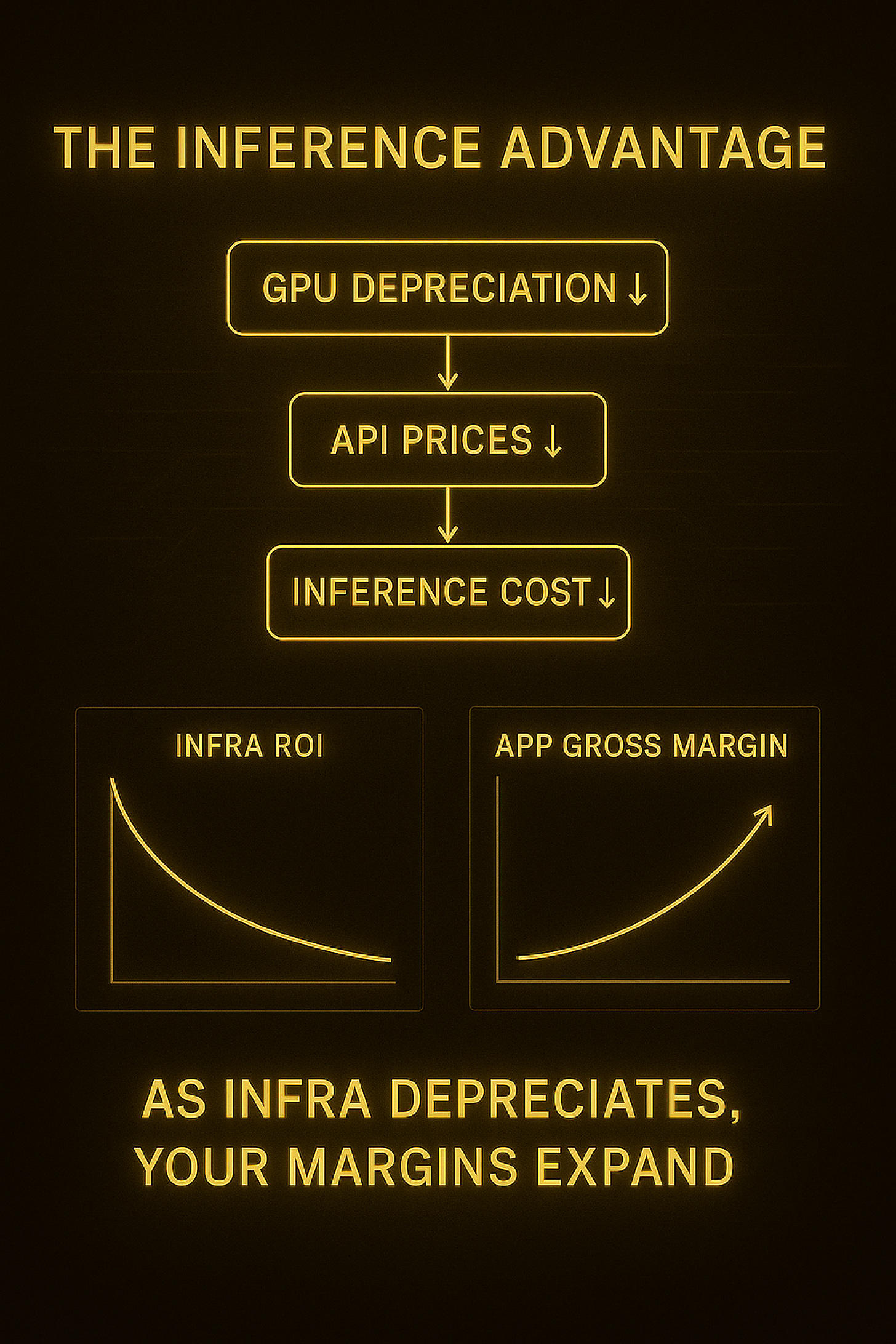

📈 The Inference Advantage

Here’s the most counterintuitive insight from this entire series: infrastructure depreciation makes your business better, not worse.

Let me show you the math. Today, GPT-4’s API costs about $10 per million input tokens. If your application makes AI queries on behalf of users, your cost per query might be one cent. You charge $50 per month for a user who makes 500 queries. Your cost of goods sold is $5, your revenue is $50, your gross margin is 90%.

Now fast forward to 2027 after the infrastructure correction. H100 GPUs have depreciated. There’s overcapacity in the market. Model providers are competing on price. Suddenly GPT-4-equivalent capabilities cost $1 per million tokens, 10× cheaper than today. Your cost per query drops to a tenth of a cent. You still charge $50 per month. Your cost of goods sold is now $0.50. Your gross margin is 99%.
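It’s worth running this arithmetic yourself, because the margin depends heavily on how many queries each user makes. Here’s a minimal sketch using the $50 price and the per-query costs from the text. The 500 queries per month is an assumed volume, chosen because it’s the point where one cent per query gives a 90% margin.

```python
def gross_margin(price: float, queries: int, cost_per_query: float) -> float:
    """Gross margin as a fraction of revenue for one subscriber-month."""
    cogs = queries * cost_per_query
    return (price - cogs) / price


PRICE = 50.00   # USD per user per month, from the text
QUERIES = 500   # assumed monthly query volume per user

today = gross_margin(PRICE, QUERIES, 0.01)    # one cent per query
later = gross_margin(PRICE, QUERIES, 0.001)   # a tenth of a cent per query
print(f"today: {today:.0%}, after a 10x price drop: {later:.0%}")

# Margin is sensitive to volume: at 5,000 queries and one cent each,
# COGS equals the $50 price and nothing is left over.
heavy = gross_margin(PRICE, 5_000, 0.01)
print(f"heavy user today: {heavy:.0%}")
```

The heavy-user case is the one to watch. A flat subscription with no usage cap can go from great margins to zero on your most active accounts.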

You captured the margin expansion. You did nothing except wait while infrastructure depreciated, and your profitability exploded. Meanwhile, the infrastructure investor who bought those H100s paid $30,000 per GPU in 2024 and now owns hardware worth $10,000. They lost $20,000 per GPU. You never owned the GPU. You just rented compute. When it depreciated, AWS absorbed the loss and passed cost savings to you through lower prices.

This is why the railway analogy actually works, just not in the way people think. Infrastructure investors are like the people who bought locomotives in 1850, watched them become obsolete by 1870, and hoped to make money in the narrow window before technological progress made their investment worthless. You’re like the passengers who bought train tickets and benefited as competition between rail companies drove prices down. One group took all the depreciation risk. The other group captured all the consumer surplus.

The infrastructure boom creates a temporary subsidy for application builders. Cloud providers overbuild capacity, whether for rational or irrational reasons. Competition drives prices down because they need to fill that capacity. You benefit from cheap compute without having to buy the hardware yourself. And as costs drop 10× over the next five years, your margins expand continuously. This is the prosperity phase that Part 4 said doesn’t exist for infrastructure. But it absolutely exists for applications. The trains rust, but the passengers benefit from cheap, abundant transport.

🚫 What Not to Do

Even at the application layer, there are ways to fail spectacularly. Let me be clear about the anti-patterns.

Don’t build your own infrastructure. This seems obvious after everything we’ve covered, but I still see founders saying “we’ll train our own models for differentiation” or “we’ll buy GPUs for cost savings” or “we’ll build our own vector database from scratch.” This is a mistake. It requires capital you don’t have, expertise you likely don’t have, and it distracts from building your actual product. It exposes you to exactly the depreciation risk we’ve been discussing. Stay asset-light. Rent everything. Use managed services. Focus on your application logic and user experience, not on becoming an infrastructure company.

Don’t give away free product indefinitely. The pattern I see constantly is founders saying “we’ll get to a million users and then figure out monetization” or “network effects will create value before we charge” or “freemium conversion will happen later.” This fails because the correction hits before you monetize. Free users don’t convert when budgets tighten. You run out of runway. And critically, you never get feedback on willingness to pay. Charge by month six at the latest. A small price is infinitely better than zero. Get real signal on whether anyone values what you’re building.

Don’t bet on AGI arriving. If your product pitch is “this only works with AGI-level capabilities” or “we’re building for the post-AGI world” or “current models aren’t good enough yet,” you’re making excuses for not shipping. AGI might happen (I’d put it at roughly 15 percent probability by 2028), but that’s not high enough to bet your company on it. Build for current capabilities. Ship now. You can always incorporate better models when they arrive, but if you wait for AGI, you miss the window entirely.

Don’t lock into a single model provider. If you hard-code OpenAI everywhere in your stack, you can’t optimize costs, you can’t adapt to pricing changes, and you’re vulnerable if they have outages or go away. Build model-agnostic architecture from day one. Support GPT, Claude, and open-source models. Abstract your model calls behind your own interface. Route different types of requests to different models based on cost, latency, and quality requirements. Your competitors who locked into OpenAI will have COGS that are 10× yours, and that margin difference compounds every month.

And finally, don’t ignore unit economics. If you’re spending a dollar in customer acquisition cost to generate 50 cents in lifetime value, you don’t have a business, you have a money-losing operation that dies when funding dries up. Know your CAC, know your LTV, track them obsessively from day one. Target LTV to CAC ratio above three to one at minimum. Target gross margins above 70 percent. Track cohort retention religiously. Fix the economics before you scale spending, because you can’t raise your way out of broken unit economics in a down market.

🛡️ Positioning for the Correction

The infrastructure correction is coming in 2027-2029. Here’s how to position yourself to not just survive, but thrive.

If you have revenue by mid-2026, you’re in an excellent position. You have three options, and you can choose based on your goals and circumstances. First option: cut to profitability by the fourth quarter of 2026, then grow 30 to 50 percent annually without outside capital. You survive the correction independently, you acquire distressed competitors at fire-sale prices in 2028-2029, and you emerge as a category winner by 2030. This is the organic growth path.

Second option: raise your Series B preemptively in late 2026 before the correction hits. Get 24 to 36 months of runway, sit out the storm with a cash buffer, and use others’ distress as competitive advantage. You’re not desperate, you’re well-capitalized, and you can hire talent that becomes available at 50 to 70 percent of 2026 compensation levels.

Third option: get acquired. Microsoft, Google, and Salesforce will absolutely buy revenue-generating AI companies before the correction hits. If you’re doing $2 million in annual recurring revenue with 100 percent year-over-year growth and strong unit economics, you can exit at 10 to 20× revenue multiples in 2026. That window closes when the correction hits and valuations crater.

The key metric is this: if you’re generating $2 million-plus in ARR with triple-digit growth and unit economics that actually work—LTV to CAC above five to one, gross margin above 75 percent—you have real options. You’re not at the mercy of the market. You choose your path.

But if you don’t have revenue by mid-2026, you’re in serious trouble. You can’t raise because investors want traction in a tight market. You can’t get acquired because you have no revenue and therefore no strategic value. You can’t cut to profitability because you were never profitable to begin with. Your runway runs out during a capital drought, and that’s the end. The emergency playbook at that point is brutal: pivot to revenue immediately even if it means dropping all your free users, cut burn by 50 to 75 percent, accept a bridge round at a down valuation if you can get it, or shut down gracefully and preserve your reputation for the next venture.

This is why the 18-month revenue rule isn’t aspirational—it’s existential. The correction will be merciless to companies without revenue and proven unit economics.

💎 Why the Correction Actually Helps You

This sounds counterintuitive, but the 2027-2029 correction creates massive opportunities for disciplined application builders. Let me explain why.

First, your costs plummet. When infrastructure depreciates, your inference costs drop 50 to 90 percent. Model API pricing craters because there’s overcapacity and providers need workloads. Vector database costs fall because cloud providers are desperate for customers. Your gross margins expand from 80 percent to 95 percent with no effort on your part. That’s pure profit expansion that you can reinvest in growth while your competitors are struggling to survive.

Second, talent becomes available. The infrastructure boom employed thousands of engineers at OpenAI, Anthropic, CoreWeave, and dozens of AI startups. When the correction hits, these companies lay off or shut down. Top-tier talent enters the market. You can hire at 50 to 70 percent of 2026 compensation levels and dramatically upgrade your team, though your well-capitalized competitors will be doing the same thing you are.

Third, you can acquire distressed assets at fire-sale prices. Struggling competitors sell for one to two times revenue instead of 10 to 20×. You get their team, their customer list, and their technology for pennies on the dollar. You consolidate market share. Customers whose vendors shut down need new solutions, and you’re the obvious choice because you’re still operating. You land customers at a tenth of your normal customer acquisition cost.

And fourth, competition clears out dramatically. The companies that die in the correction are predictable: companies with no revenue, companies with negative unit economics, companies that overborrowed, companies that depended on continued hype to raise their next round. The survivors are you and other disciplined builders with proven revenue, plus the well-capitalized giants like Microsoft and Google. The market becomes dramatically less noisy. Customers consolidate to proven vendors. It’s easier to gain share because there’s better signal-to-noise for buyers.

You’re not just surviving the correction—you’re positioned to dominate coming out of it. That’s what happens when you build with discipline while everyone else burns money.

🎲 The Four Scenarios and What They Mean for You

Let me walk through the four probability scenarios from Part 4, but from your perspective as an application builder, not an infrastructure investor.

Scenario one: AGI by 2028, which I’d put at 15 percent probability. If this happens, capabilities jump 10× almost overnight, many software jobs get automated, and your application is either obsolete or far more valuable depending on what you built.

For developer tools, the risk is that AGI writes better code than your tool, making you obsolete. The opportunity is that your tool helps humans work alongside AGI, making you more valuable. The key question is whether you positioned yourself as a replacement for developers or as an augmentation tool. If you built for human-AI collaboration rather than full automation, you survive and potentially thrive. Your hedge here is making sure your product works well in a world where AI is vastly more capable but humans are still in the loop.

For knowledge management platforms, this scenario is actually positive. Knowledge management is still needed even with AGI. In fact it becomes more valuable because AGI needs to query your structured knowledge base to be useful. Your data moat strengthens because you have the proprietary information that generic AGI doesn’t have. Your hedge is making your API AGI-compatible and positioning as the “knowledge layer” that AGI systems query.

For vertical SaaS, domain expertise becomes more valuable, not less. AGI is general; you’re specialized. Regulatory moats strengthen because compliance requires human oversight regardless of AI capabilities. Your customer relationships deepen because trust matters more when AI is involved in critical decisions. Your hedge is doubling down on compliance, domain knowledge, and human-in-the-loop workflows.

If AGI arrives, don’t fight it. Integrate it immediately. Pivot from “AI tool” to “AI workflow orchestration.” Your moat is data and domain expertise, not raw capability. Companies with proprietary data win, and that’s you if you built correctly.

Scenario two: incremental progress, which I’d put at 50 percent probability. This is the base case you should plan for. Models improve 20 to 30 percent per year but there’s no phase change. Enterprise adoption grows steadily but not explosively. The infrastructure correction happens in 2027-2029 but it’s not catastrophic. And critically, the application layer continues thriving throughout.

In this scenario, you execute exactly the plan I’ve laid out. Revenue growth continues on trajectory. Your margins expand as compute costs drop 50 to 70 percent. The correction creates M&A opportunities where you acquire distressed competitors. You emerge stronger post-correction because you had discipline and they didn’t. This is the scenario where patience and execution pay off massively. By 2029 you’re a category leader, not because you were the flashiest or raised the most money, but because you were still standing when the music stopped.

Scenario three: capabilities plateau, which I’d put at 25 percent probability. GPT-5 is only incrementally better than GPT-4. Scaling laws break. Enterprise adoption slows. AI hype fades significantly. The infrastructure correction is severe.

For developer tools, the risk is that if you need better models to deliver value, your growth stalls. But if current capabilities are sufficient, you’re fine. The key question is whether your product works well with GPT-4-level capabilities. If yes, you’re insulated. If no, you have a problem.

For knowledge management, you’re actually well-positioned. Semantic search doesn’t need AGI. Your product still delivers value with current models. Domain expertise and data moats matter more than raw AI capability. Your hedge is emphasizing workflow and user experience rather than “AI-powered” in your marketing.

For vertical SaaS, you’re in the best position. Domain expertise always mattered more than AI capability anyway. Regulatory moats strengthen. You’re a solution that uses AI, not an AI solution, and that distinction matters. Your hedge is de-emphasizing AI in your pitch and emphasizing vertical domain value.

If plateau happens, pivot your messaging from “AI-powered” to “domain expert tool with AI assistance.” Emphasize non-AI moats like data, integrations, compliance, and workflow. Double down on user experience and reliability. Many “AI companies” will die as hype fades. You survive because you were always a real business that happened to use AI, not an AI business trying to find a use case.

Scenario four: efficiency gains, 10× improvement in algorithmic efficiency, which I’d put at 10 percent probability. This is your best-case scenario despite the low probability. DeepSeek-style breakthroughs proliferate. Same capability with 10× less compute. Model inference costs crater overnight.

In this scenario, your cost of goods sold drops 90 percent immediately. Your gross margins explode from 80 percent to 95-plus percent. You keep pricing stable and pocket the entire margin difference. If you’re doing a million dollars in revenue with $200,000 in COGS today (80 percent gross margin), then after 10× efficiency you’re doing a million in revenue with $20,000 in COGS (98 percent gross margin). You just gained $180,000 in pure margin expansion, which is a 22.5 percent increase in gross profit with zero effort.
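Here’s that calculation as a quick sketch, using the revenue and COGS figures from the example above. Note that the percentage uplift is measured against gross profit, not net income, since the example says nothing about operating expenses.

```python
revenue = 1_000_000
cogs_before = 200_000
cogs_after = cogs_before / 10   # same capability at one tenth the compute cost

gross_profit_before = revenue - cogs_before
gross_profit_after = revenue - cogs_after

margin_before = gross_profit_before / revenue
margin_after = gross_profit_after / revenue
uplift = (gross_profit_after - gross_profit_before) / gross_profit_before

print(f"margin {margin_before:.0%} -> {margin_after:.0%}, "
      f"gross profit +${gross_profit_after - gross_profit_before:,.0f} ({uplift:.1%})")
```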

You switch to the most efficient models immediately, which gives you a competitive advantage. Don’t pass the cost savings to customers initially—capture the margin. Use that margin expansion to out-spend competitors on sales and marketing, build features faster, expand internationally, and acquire competitors. Eventually pass some savings to customers and become the price leader. Dominate through superior economics.

Infrastructure investors holding expensive GPUs get crushed in this scenario. You with zero capex exposure capture pure upside. This is why efficiency gains are amazing for you—you get 100 percent of the benefit in your P&L.

⚙️ The Technical Choices That Actually Matter

Let me get tactical on the infrastructure decisions that will determine your margins and flexibility over the next three years.

For vector databases, you have three real choices. First is Aurora PostgreSQL with pgvector, which gives you one database for everything—structured data and vectors together. The architecture is simple, it’s cost-effective at scale, and SQL is familiar to most developers. The downside is that scaling vectors requires tuning, but for most applications this is the right choice. Start here unless you have a specific reason not to.

Second is Amazon Bedrock Knowledge Bases with OpenSearch Serverless, which is fully managed and AWS-native. It scales automatically and requires no ops overhead. The downside is it’s more expensive than self-managed and you have less control over configuration. This makes sense if you’re AWS-committed and want a managed solution where you trade some cost for operational simplicity.

Third is specialized vector databases like Pinecone, Weaviate, or Qdrant. These are purpose-built for vectors with the best performance and excellent developer experience. The downside is they’re an additional service to manage and can be expensive at scale. Only go this route if vector search is 80 percent-plus of your value proposition and you need the absolute best performance.

My recommendation for most teams: start with Aurora PostgreSQL plus pgvector. Simpler architecture means fewer failure modes. Migrate to a specialized vector database only if scaling demands it. Don’t over-engineer early.

For model routing, build this infrastructure early even though it seems like extra work. Don’t hard-code which model you call. Build a routing layer that sits between your application and the various model providers. Your routing logic should consider cost, latency, quality, and availability. Route cheap queries to cheap models. Route time-sensitive requests to fast models. Route complex analysis to the best models. Fail over to backups if your primary is down.

Why this matters: you capture every cost reduction immediately. You optimize spend without code changes. You hedge against any single provider’s pricing or availability. Your competitors who hard-coded OpenAI everywhere will have cost of goods sold that’s 10× yours, and that margin difference compounds every month.
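A routing layer doesn’t have to be complicated to start. Here’s a minimal sketch of the idea: try the cheapest model that meets a quality bar and fail over if it errors. Everything here is hypothetical, including the model names, prices, quality scores, and the `call` functions, which stand in for whatever provider clients you actually use. Latency-based routing is left out to keep it short.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Model:
    name: str                    # hypothetical label, not a real model ID
    cost_per_1k_tokens: float    # USD, illustrative
    quality: int                 # 1 (basic) to 3 (best), illustrative
    call: Callable[[str], str]   # provider client wrapped as a function


class Router:
    def __init__(self, models: list[Model]):
        # Cheapest first, so the first adequate model is also the cheapest
        self.models = sorted(models, key=lambda m: m.cost_per_1k_tokens)

    def complete(self, prompt: str, min_quality: int = 1) -> tuple[str, str]:
        """Try adequate models from cheapest up; fail over on any error."""
        errors = []
        for model in self.models:
            if model.quality < min_quality:
                continue
            try:
                return model.name, model.call(prompt)
            except Exception as exc:  # outage, rate limit, timeout
                errors.append(f"{model.name}: {exc}")
        raise RuntimeError("no model available: " + "; ".join(errors))


def flaky(prompt: str) -> str:
    # Simulates a provider that is currently down
    raise TimeoutError("simulated outage")


router = Router([
    Model("large", 0.030, 3, lambda p: f"[large] {p}"),
    Model("small", 0.001, 1, lambda p: f"[small] {p}"),
    Model("medium", 0.005, 2, flaky),
])
print(router.complete("summarize this ticket"))
print(router.complete("analyze this contract", min_quality=2))
```

The first call lands on the cheapest model. The second skips it for quality, hits the simulated outage on the middle model, and falls through to the large one. Your application code only ever calls `router.complete`, so swapping providers or prices is a change to the model list, not to the product.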

On caching, implement aggressive caching from day one because it’s essentially free money. Cache embedding generations: the same text sent to the same embedding model produces the same embedding, so those entries never need to expire until you change models. Cache common queries, especially “what is X” type questions. Cache template-based generations. Cache search results for popular queries. The impact can be large: for workloads with a lot of repetition, you can reduce inference costs by 40 to 60 percent while improving latency, because cached responses skip the model call entirely. If you’re spending $10,000 a month on inference, caching saves $4,000 to $6,000 a month, or $48,000 to $72,000 a year, and the savings grow with your usage.
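Embedding caching is the easiest place to start. Here’s a minimal in-memory sketch, assuming you have some `embed` function that calls your provider. The `fake_embed` function and the `embed-v1` model ID are placeholders I made up for the example. In production you’d back this with Redis or a database table rather than a Python dict.

```python
import hashlib
from typing import Callable


class EmbeddingCache:
    def __init__(self, embed: Callable[[str], list[float]], model_id: str):
        self._embed = embed
        self._model_id = model_id
        self._store: dict[str, list[float]] = {}
        self.hits = 0
        self.misses = 0

    def _key(self, text: str) -> str:
        # Include the model ID so a model change never serves stale vectors
        raw = f"{self._model_id}\x00{text}".encode("utf-8")
        return hashlib.sha256(raw).hexdigest()

    def get(self, text: str) -> list[float]:
        key = self._key(text)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        vector = self._embed(text)  # the only place a paid call happens
        self._store[key] = vector
        return vector


calls = []


def fake_embed(text: str) -> list[float]:
    # Stand-in for a real embedding API call; records each invocation
    calls.append(text)
    return [float(len(text)), 0.0]


cache = EmbeddingCache(fake_embed, model_id="embed-v1")
for text in ["refund policy", "refund policy", "pricing", "refund policy"]:
    cache.get(text)
print(f"hits={cache.hits} misses={cache.misses} paid_calls={len(calls)}")
```

Four lookups, two paid calls. The hit and miss counters matter as much as the cache itself, because they tell you what your real savings are.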

For observability, instrument everything so you actually know your unit economics at a granular level. Track which model you used for each request, tokens consumed, cost per request, latency, cache hit or miss, and user ID so you can calculate lifetime value. This matters because you need to know which users are profitable versus loss-making. You need to identify optimization opportunities. You need to prove ROI to enterprise customers. You need to make data-driven architecture decisions. Build custom dashboards that show revenue per user versus COGS per user, and watch this religiously. This is your most important metric.
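As a starting point, here’s a minimal sketch of the per-request record and the revenue-versus-COGS rollup described above. The field names, user names, and dollar amounts are all hypothetical; in practice these records would go to your logging pipeline or warehouse rather than a Python list.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class RequestLog:
    user_id: str
    model: str
    tokens: int
    cost_usd: float
    latency_ms: float
    cache_hit: bool


def cogs_per_user(logs: list[RequestLog]) -> dict[str, float]:
    """Sum inference cost by user."""
    totals: dict[str, float] = defaultdict(float)
    for log in logs:
        totals[log.user_id] += log.cost_usd
    return dict(totals)


def unprofitable_users(logs: list[RequestLog],
                       revenue_per_user: dict[str, float]) -> list[str]:
    """Users whose inference cost exceeds what they pay."""
    costs = cogs_per_user(logs)
    return sorted(u for u, cost in costs.items()
                  if cost > revenue_per_user.get(u, 0.0))


# Hypothetical month of traffic for two users on a $50 plan
logs = [
    RequestLog("alice", "small", 800, 0.02, 310.0, False),
    RequestLog("alice", "small", 0, 0.00, 4.0, True),
    RequestLog("bob", "large", 90_000, 35.00, 5200.0, False),
    RequestLog("bob", "large", 70_000, 28.00, 4700.0, False),
]
print(unprofitable_users(logs, {"alice": 50.0, "bob": 50.0}))
```

Once you can name the loss-making accounts, you can decide what to do about them: route them to cheaper models, add usage caps, or move them to a higher tier.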

💡 The Uncomfortable Truth

Let me synthesize everything: the infrastructure boom is a bad investment for people buying GPUs and building data centers. Parts 1 through 4 were completely correct about that. But the infrastructure boom creates a golden, temporary opportunity for application builders, and that’s a completely different analysis.

You should build now because compute is temporarily subsidized. Cloud providers overbuilt and need workloads. Model providers are pricing aggressively for market share. You benefit from their competition without taking any of their risk.

You should build now because models are good enough today. You don’t need AGI. Current capabilities handle 80 percent-plus of valuable use cases. Waiting for better models is procrastination, not strategy.

You should build now because depreciation helps you rather than hurts you. As infrastructure depreciates, your costs drop 10×. You capture margin expansion. Infrastructure investors eat the losses. You have pure upside with zero downside.

You should build now because customers have budget. Enterprises allocated AI spending for 2025-2026. First movers capture this budget. After the correction, budgets tighten. Get revenue now, survive the correction later.

And you should build now because competition is distracted. They’re burning cash on user growth rather than building sustainably. They’re focused on hype and fundraising rather than unit economics. They’re giving away products for free rather than proving willingness to pay. You can win with discipline while they burn money.

The window is 2025 to 2027. This is not forever. Act with urgency. Build and launch in 2025 and 2026. Get to meaningful revenue—$500,000-plus in ARR—by mid-2026. Be profitable or well-capitalized by late 2026. When the correction hits in 2027-2029, you thrive. Your costs crater, you acquire competitors, talent becomes available at steep discounts, and competition clears out. You emerge as a category winner by 2030.

If you execute this timeline, you win in 85 percent-plus of scenarios. AGI arrives: you pivot fast and leverage your data moat. Incremental progress: you execute the plan, this is the base case. Capabilities plateau: your domain expertise matters, you’re fine. Efficiency gains: massive margin expansion, you dominate.

This is the difference between infrastructure and applications. The analysis changes completely. The risk profile inverts. Infrastructure investors should sit out. Application builders should build.

Parts 1 through 4 analyzed why you shouldn’t buy the tracks. This part explains why you should absolutely use the trains—especially while tickets are cheap and the trains are running everywhere you need to go.

The infrastructure boom created temporary abundance. Cheap compute, desperate cloud providers, allocated customer budgets, distracted competition. That window closes in 2027. Build now. Get to revenue in 18 months. Position for the correction. When it hits, you’ll be profitable with expanding margins while infrastructure investors write off billions.

You’re not riding the bubble. You’re using the bubble’s temporary abundance to build something real that survives and thrives after it pops. That’s the difference. That’s why the analysis changes completely. That’s why you build.

This concludes the 5-part series on the Great AI Infrastructure Build-Out of 2025.

The full series:

The synthesis: infrastructure is a bad investment with high depreciation risk and unclear ROI. Applications are a compelling opportunity that benefit from depreciation. The window is 2025-2027. Get to revenue before the correction. Execute with discipline. Position to thrive when infrastructure investors are writing off losses. Emerge as a category winner.

Now go build.

Thank you for reading. If you liked it, share it with your friends, colleagues, and everyone interested in the startup investor ecosystem.

If you've got suggestions, an article, research, your tech stack, or a job listing you want featured, just let me know! I'm keen to include it in the upcoming edition.

Please let me know what you think of it, love a feedback loop 🙏🏼

For the ❤️ of startups

✌🏼 & 💙

Derek